At the recent RISC-V North America summit, Frans Sijstermans, NVIDIA’s Vice President of Multimedia Architecture, explained why NVIDIA chose RISC-V as the architecture for its embedded microcontrollers and how it has become an important part of the company’s product success.

Background: NVIDIA From Zero to One Billion RISC-V Cores Shipped

NVIDIA’s history with RISC-V dates back to 2016, when the company began moving from its internal Falcon microprocessor, used as a logic controller in GPU products, to a new alternative. After evaluating available architectures, NVIDIA opted for the RISC-V ISA, and since then it has been adding RISC-V microcontrollers to its products and switching out the legacy Falcons.

NVIDIA estimated that it had shipped around 3 billion Falcon processors over that core’s 10-year lifespan, and that the transition would eventually lead to billions of RISC-V processors being shipped. Typically, each NVIDIA chipset includes between 10 and 40 RISC-V cores, depending on the configuration. In 2024, based on the total number of chips shipped, NVIDIA passed the mark of 1 billion RISC-V processors.

NVIDIA has been a proactive member of the RISC-V community since the inaugural community meetings and has had near-continual representation at board level. The company participates in many of the technical working groups, both to contribute and share its work and to benefit from the contributions of other community members, and it also participates in the RISE software organization.

Nevertheless, NVIDIA is not often associated with RISC-V. This is probably because much of its work is done in-house and, although important, tends to relate to the non-customer-facing aspects of its products. RISC-V plays a significant part in three key areas of the NVIDIA product portfolio:

- Function-level controllers, including video codecs, displays, cameras, memory controllers (training), chip-to-chip interfaces, and context switching

- Chip/system-level control, including resource management, power management, and security

- Data processing, including packet routing in networking, and activation and other DL network layers in the DLA (not the GPU)

NVIDIA’s RISC-V Cores

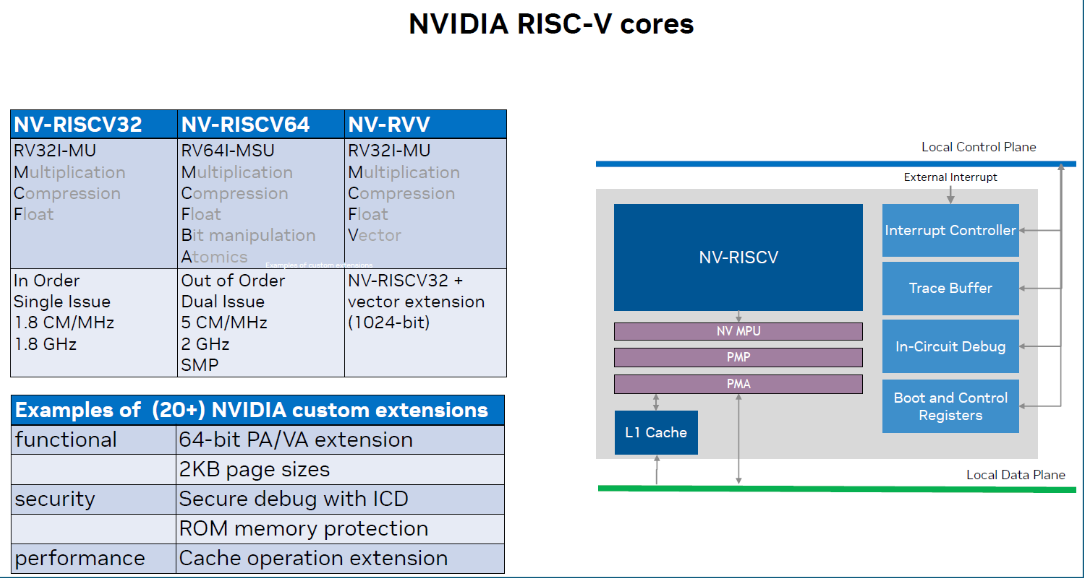

NVIDIA’s transition to RISC-V from the 32-bit-only Falcon core was initially driven by a need for 64-bit capability. Its first RISC-V development was a fairly ordinary dual-issue, out-of-order RISC-V core with standard extensions that can be deployed in a multi-processor version. Subsequently, NVIDIA added a 32-bit version for area-constrained applications, plus a vector processor with a 1024-bit vector unit.

NVIDIA also developed several custom extensions, some specific to NVIDIA and others beneficial for general users. For example, the 2kB page size extension is unique to NVIDIA and improves legacy software performance by 50%. Similarly, the 64-bit addresses extension is useful in very big systems, such as data centers, where the memory is distributed and can be distant.

Conversely, their pointer masking extension has useful potential for safety and security applications across the wider community. NVIDIA thus submitted the extension to the RISC-V standard where it has been approved and is now used by numerous community members.
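As a rough illustration of the idea behind pointer masking, the sketch below models a 64-bit pointer whose upper bits carry a tag that is ignored when the address is formed. The tag width (`TAG_BITS = 7`) and the helper names are assumptions chosen for illustration, and the model simply clears the masked bits; it does not reproduce the exact address transformation defined in the ratified specification or anything specific to NVIDIA’s implementation.

```python
XLEN = 64       # pointer width in bits
TAG_BITS = 7    # assumed number of masked upper bits (illustrative value)

ADDR_BITS = XLEN - TAG_BITS
ADDR_MASK = (1 << ADDR_BITS) - 1


def tag_pointer(address: int, tag: int) -> int:
    """Store a small tag in the upper bits of a pointer."""
    if not 0 <= tag < (1 << TAG_BITS):
        raise ValueError("tag does not fit in TAG_BITS")
    if address & ~ADDR_MASK:
        raise ValueError("address uses bits reserved for the tag")
    return (tag << ADDR_BITS) | address


def effective_address(pointer: int) -> int:
    """Address used for the memory access: the tag bits are ignored."""
    return pointer & ADDR_MASK


def pointer_tag(pointer: int) -> int:
    """Recover the tag that software stored in the upper bits."""
    return (pointer >> ADDR_BITS) & ((1 << TAG_BITS) - 1)
```

Software such as a memory-safety checker could compare `pointer_tag(p)` against a tag recorded for the target allocation, while loads and stores still go to `effective_address(p)`.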

NVIDIA has additional extensions enabling general functionality, security, and performance, and while none of these are particularly advanced, they are of critical importance for their overall system.

A Subsystem enabled by RISC-V

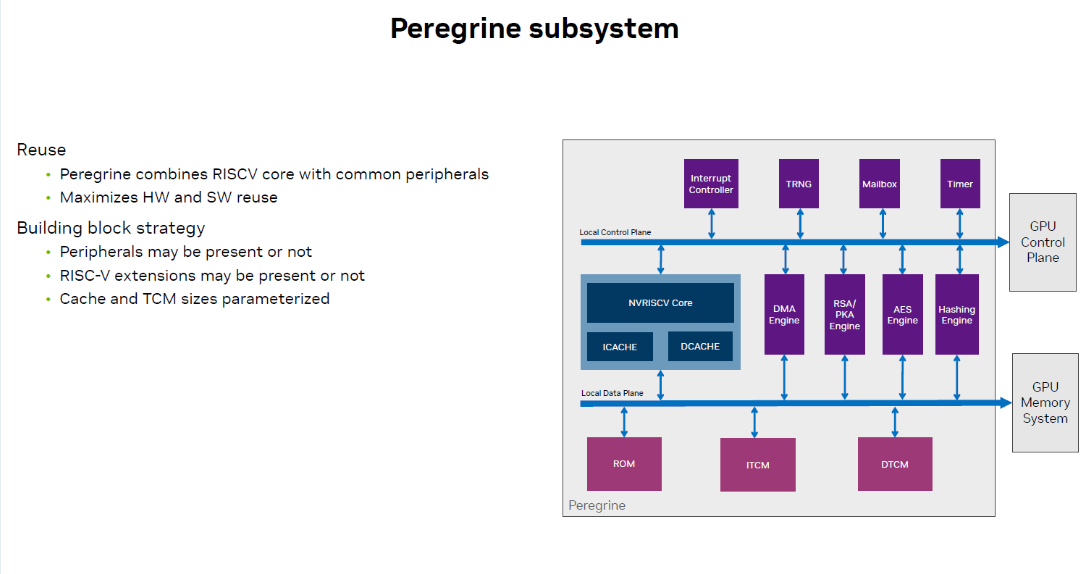

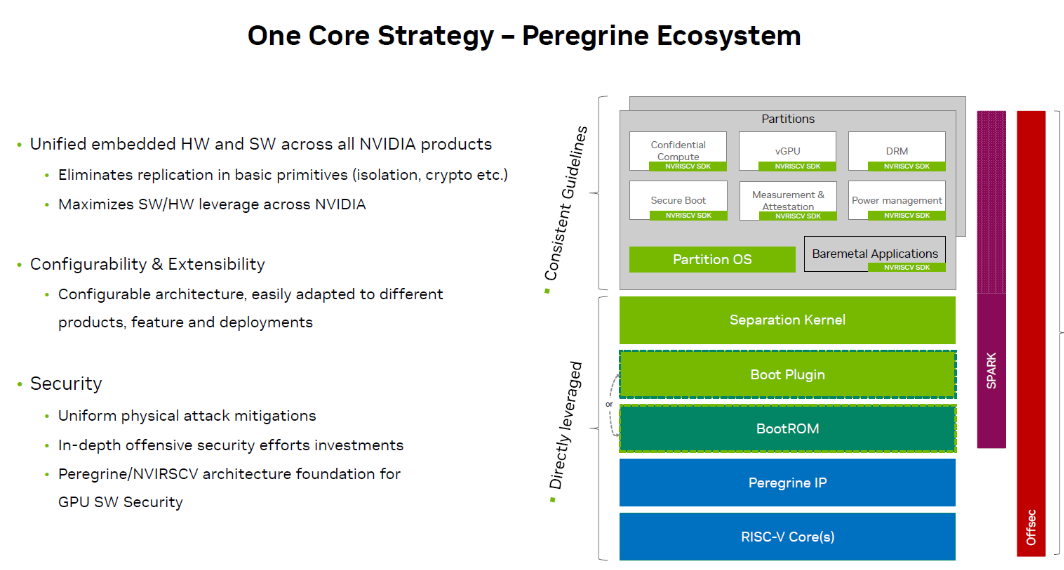

NVIDIA’s SoCs use the company’s own RISC-V subsystem, named Peregrine. In addition to the RISC-V cores, it includes peripherals such as DMA and security IP. Peregrine is of crucial importance for NVIDIA because it lets the company pick and reuse any of its 30+ system control and management applications without needing a separate development effort each time. The RISC-V architecture gives NVIDIA the flexibility and modularity to configure the subsystem according to the requirements. For example, it can choose a 32-bit or 64-bit core and then add the specific extensions required for the workload, thus maximizing development reuse and return on investment.

Similarly, on the software side, a single stack is used for all 30+ applications, which allows significant reuse of items such as the boot code, the OS, the separation kernel, and libraries at the application level.

NVIDIA also aims to make its products as secure as possible. To that end, it maintains an internal offensive security team whose members act as ‘hackers’, actively trying to break designs by locating weaknesses, vulnerabilities, and bugs.
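The configuration choice described above (pick a base width, then add only the extensions a workload needs) can be sketched as follows. This is a minimal sketch, not NVIDIA’s tooling: `CoreConfig` and the two example configurations are hypothetical, and the ISA string is built in a simplified way that keeps extensions in the order given rather than enforcing the canonical ordering rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CoreConfig:
    """Hypothetical description of one core in a configurable subsystem."""
    xlen: int                          # base integer width: 32 or 64
    extensions: tuple[str, ...] = ()   # e.g. ("m", "a", "c", "zicsr")

    def isa_string(self) -> str:
        if self.xlen not in (32, 64):
            raise ValueError("xlen must be 32 or 64")
        # Single-letter extensions are appended directly to the base name;
        # multi-letter extensions are separated by underscores.
        single = "".join(e for e in self.extensions if len(e) == 1)
        multi = [e for e in self.extensions if len(e) > 1]
        return "_".join([f"rv{self.xlen}i{single}"] + multi)


# Two illustrative (assumed) configurations: a small controller and a
# 64-bit core with a vector unit.
small_controller = CoreConfig(32, ("m", "c"))
vector_core = CoreConfig(64, ("m", "a", "c", "v", "zicsr"))
```

Keeping the configuration as data makes it easy to reuse one description across many applications and to derive compiler flags or documentation from it.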

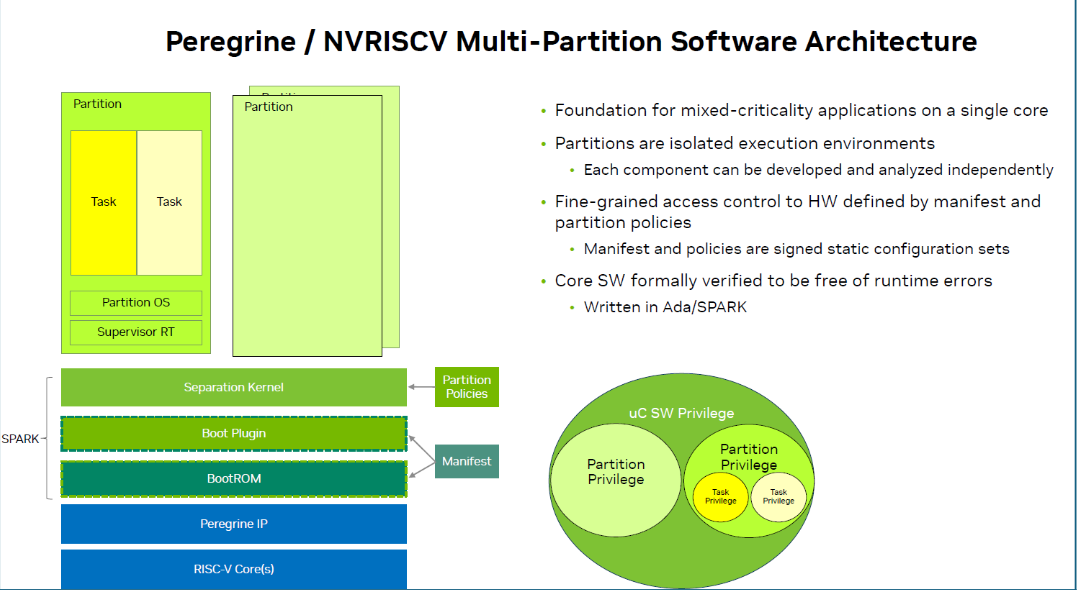

A core component of the Peregrine subsystem is the separation kernel, which can be thought of as a very basic hypervisor system. It divides the system into different pieces that are independent of each other and can be separately verified. This allows the user to run different pieces of software on separate partitions. For example, a safety-compliant application with ASIL-D certification can be run on one partition while another non-certified application can be run independently on another.
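To make the partitioning idea concrete, here is a minimal sketch of the kind of check a separation kernel relies on: each partition owns a memory region, regions must not overlap, and an access is allowed only inside the owner’s region. The `Partition` type, the function names, and the addresses are all assumptions for illustration; real isolation is enforced by hardware memory protection rather than by a software check like this one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Partition:
    """Hypothetical partition that owns one contiguous memory region."""
    name: str
    base: int
    size: int

    @property
    def end(self) -> int:
        return self.base + self.size   # first address past the region

    def contains(self, address: int) -> bool:
        return self.base <= address < self.end


def check_isolated(partitions: list[Partition]) -> None:
    """Raise ValueError if any two partitions share memory."""
    ordered = sorted(partitions, key=lambda p: p.base)
    for lower, upper in zip(ordered, ordered[1:]):
        if lower.end > upper.base:
            raise ValueError(f"{lower.name} overlaps {upper.name}")


def may_access(partition: Partition, address: int) -> bool:
    """An access is permitted only inside the partition's own region."""
    return partition.contains(address)
```

With non-overlapping regions, a certified partition and a non-certified one can each be reasoned about separately, which is the property the paragraph above describes.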

Applications

NVIDIA has more than 30 distinct system control and management applications that use a RISC-V core and can be deployed flexibly depending on the specific use case. Two of these applications are detailed below.

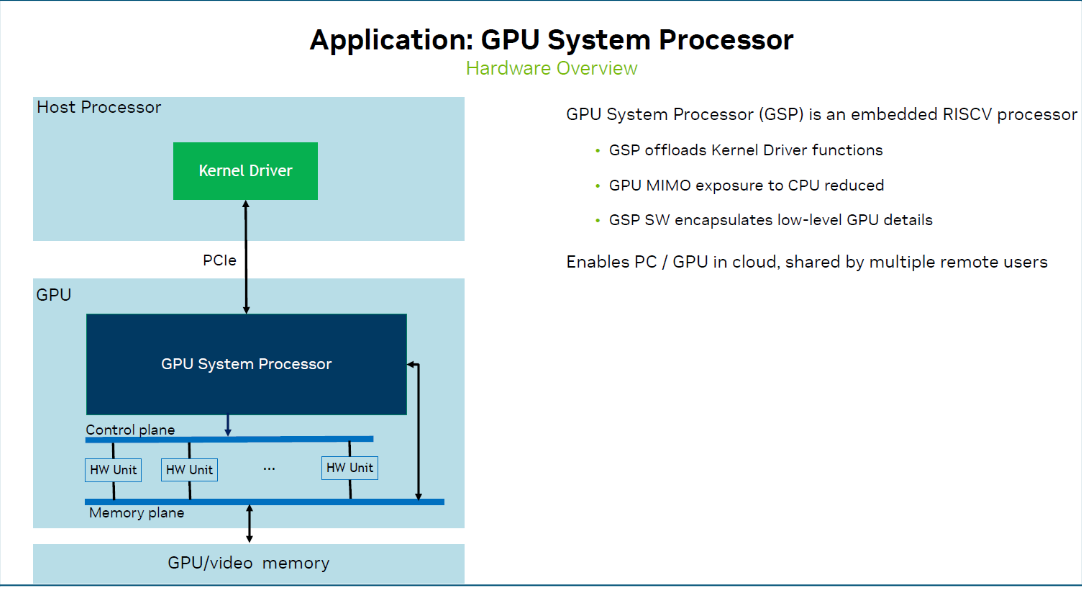

Firstly, the GPU system processor (GSP) has fundamentally changed how NVIDIA approaches its software. The GSP is a processor that sits at the top of the GPU, where it creates abstractions of what can be done in the GPU. Instead of the host processor and the kernel driver using individual control registers inside the GPU, they simply talk to the GSP, which translates those higher-level commands into lower-level control register writes.

The GSP Peregrine has a 64-bit RISC-V processor, available in single-hart and multi-hart versions. Most importantly, the GSP has full access to everything in the GPU, including the memory and display controllers, which need to be very carefully managed in software. From a software perspective, the user can deploy a host processor that has a kernel driver and multiple guest virtual machines. Guest virtual machines have corresponding vGPU runtime partitions on the GSP, and the separation kernel ensures that those are isolated and do not interfere with each other. The resource manager swaps different guests in and out and ensures that allocation is fair. This capability enables specific use cases such as confidential computing, where the GPU is handed over to a guest without any impact from the hypervisor. In this case, the RISC-V architecture is fundamental to security because of its specific isolation capabilities, coupled with the properties of NVIDIA’s own extensions.
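The abstraction described above can be sketched as a small command dispatcher that turns one high-level request into a sequence of register writes. Everything named here is hypothetical: the `set_display_mode` command, the `DISP_*` register names, and the `RegisterLog` stand-in are invented for illustration and do not reflect NVIDIA’s actual command set or register map.

```python
class RegisterLog:
    """Stand-in for GPU control registers; records each write in order."""

    def __init__(self) -> None:
        self.writes: list[tuple[str, int]] = []

    def write(self, register: str, value: int) -> None:
        self.writes.append((register, value))


def set_display_mode(regs: RegisterLog, width: int, height: int) -> None:
    # One high-level command expands into several low-level writes.
    regs.write("DISP_WIDTH", width)
    regs.write("DISP_HEIGHT", height)
    regs.write("DISP_ENABLE", 1)


# Table of supported high-level commands (hypothetical).
COMMANDS = {"set_display_mode": set_display_mode}


def handle_command(regs: RegisterLog, command: str, **arguments: int) -> None:
    """Translate a high-level command into control register writes."""
    try:
        handler = COMMANDS[command]
    except KeyError:
        raise ValueError(f"unknown command: {command}") from None
    handler(regs, **arguments)
```

The host side only needs to know the command names and arguments; the register-level details stay behind the dispatcher and can change without affecting callers.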
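The source does not say which policy the resource manager uses to keep allocation fair. As one simple possibility, the sketch below uses round-robin time slicing, which is an assumption made purely for illustration; the function name and guest labels are hypothetical.

```python
from collections import deque


def round_robin(guests: list[str], time_slices: int) -> list[str]:
    """Return which guest runs in each time slice, cycling in order."""
    queue = deque(guests)
    schedule = []
    for _ in range(time_slices):
        if not queue:
            break                 # nothing to schedule
        guest = queue.popleft()   # swap the next guest in
        schedule.append(guest)
        queue.append(guest)       # swap it out to the back of the queue
    return schedule
```

Round-robin gives every guest the same share over time, so no guest can starve another; a real resource manager may weigh priorities or workload as well.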

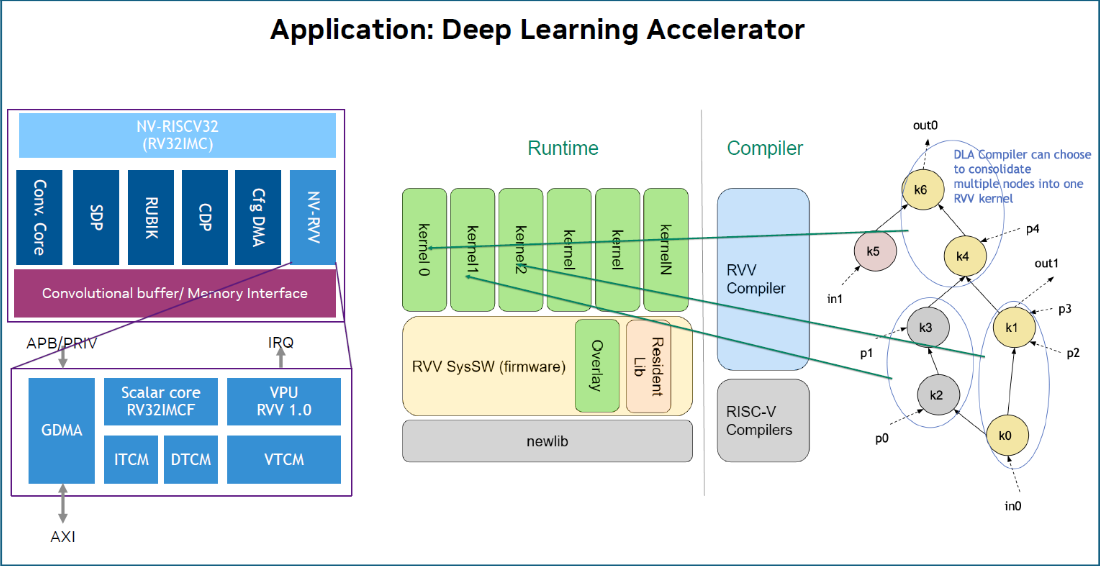

The second NVIDIA RISC-V-enabled application described is the Deep Learning Accelerator (DLA), which forms part of some AI-specific SoCs. This is essentially an inferencing engine that is programmed through graph processing. An example would be an ONNX program that represents a graph of the layers processed in a deep learning network. The toolchain then uses standard RISC-V compilers that take the kernel code and compile it into an executable. On top of that, there is an RVV compiler which turns it into a loadable. It is also possible to combine different kernels into a single kernel for the runtime to achieve the fastest execution.

The DLA does not run everything on the RISC-V processor; the main convolutional cores and the matrix multipliers are a separate entity. In the hardware diagram there are two RISC-V processors: the control processor, a simple 32-bit unit, and the vector processor, the NVRVV, with a 1024-bit vector unit. There is a convolutional core and, in total, six hardware engines. As an example, the Rubik is a smart DMA data transformer that moves the data around, while the RISC-V RVV vector processor is used for most of the layers that are not matrix multipliers. In short, it is essentially a full ONNX implementation running on the DLA.
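The split of work between the engines can be sketched as a simple planner: matrix-heavy layers go to the convolutional core, other layers go to the RISC-V vector processor, and consecutive layers bound for the same engine are grouped so they could be combined into one kernel. The operator names are standard ONNX operator types, but the engine labels, the `MATRIX_OPS` set, and the grouping rule are assumptions for illustration rather than NVIDIA’s actual scheduling logic.

```python
from itertools import groupby

# ONNX operator types assumed to map to the convolution/matrix hardware.
MATRIX_OPS = {"Conv", "MatMul", "Gemm"}


def assign_engine(op_type: str) -> str:
    """Pick a (hypothetical) engine label for one graph layer."""
    return "conv_core" if op_type in MATRIX_OPS else "vector_unit"


def plan(layers: list[str]) -> list[tuple[str, list[str]]]:
    """Group consecutive layers that run on the same engine."""
    return [(engine, list(run)) for engine, run in groupby(layers, key=assign_engine)]
```

For a layer sequence such as `["Conv", "Relu", "Softmax", "MatMul"]`, the two middle layers end up in a single vector-unit group, mirroring the idea of combining kernels to reduce dispatch overhead.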

Summary: Why RISC-V works for NVIDIA

In summary, there are five key reasons why NVIDIA chose the RISC-V ISA and why it has been a successful venture:

- Customization: The ability to customize is key, as it allows NVIDIA to maximize the use of the silicon. The RISC-V licensing model allows the company to adapt its chips, using the basic ISA as a building block and adding extensions and profiles appropriate to the specific application requirements.

- Hardware/Software Co-design: This is key, as it ensures that the hardware is optimized for the software workload to improve efficiency and performance.

- Configuration options: Standard, ‘off the shelf’ processors are often over-specified for the application. With RISC-V, NVIDIA can save cost and development effort by configuring its implementation with only the extensions that are specifically required.

- Custom Extensions: These allow NVIDIA to add its own feature requirements, such as specific functionality, security, and/or performance.

- One Common HW and SW Architecture: This allows NVIDIA to reuse assets across its 30+ applications without having to create or adapt a new architecture for each. It reduces development effort, simplifies deployment, and cuts costs.

The full NVIDIA keynote presentation is available on the RISC-V YouTube channel.