By Kevin McDermott, Vice President of Marketing at Imperas Software Ltd.

Design Verification (DV) test planning using a trusted SoC methodology for RISC-V processor verification including custom extensions


Introduction

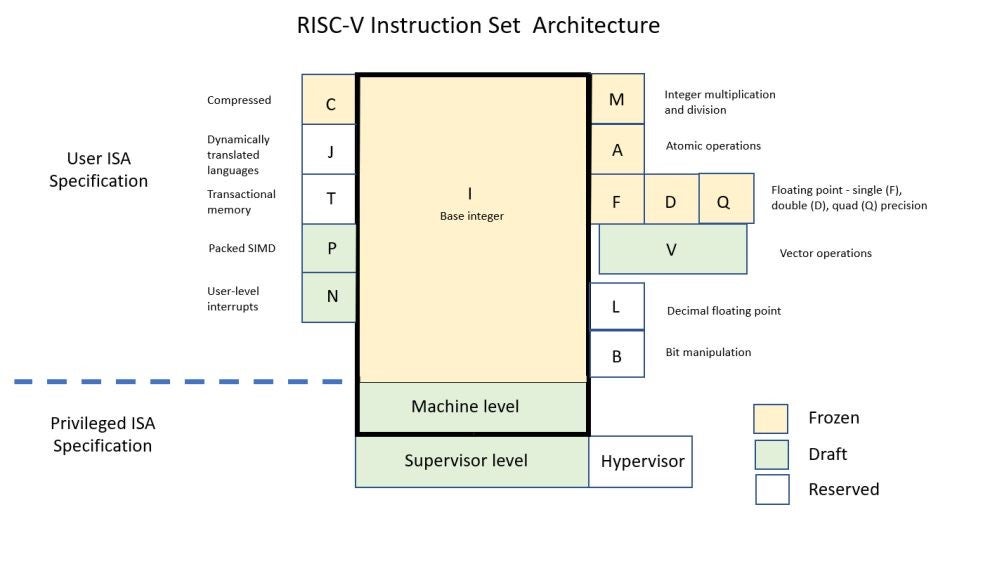

Given that RISC-V is an open instruction set architecture (ISA), a RISC-V processor designer has many implementation and configuration options, plus the freedom to extend the ISA with custom instructions and extensions. A processor is fundamentally a hardware design whose main objective is to execute software correctly, so it makes sense that software should also play an important role in the overall verification process. This article reviews the latest developments around the RISC-V compliance suite and verification testing, and serves as a practical guide to a reference model-based processor DV flow.

Processor verification is not a new topic, but market growth around a few proprietary architectures has led today’s SoC design flow to assume known-good processor IP. RISC-V, however, is an open ISA with many different register-transfer level (RTL) implementations, so some level of processor verification is now required of every adopter.

Ideally, the verification process should start at the beginning of a processor implementation project. RISC-V offers a broad array of options and extensions, and as each one is reviewed and considered for the project, its verification implications should be included in the analysis. This is especially true of any custom instructions or extensions. However, if a project has already started, or the team is evaluating some of the many open source cores available, the techniques outlined below can form the basis of a test and verification plan or, at a minimum, serve as an incoming inspection test for any imported IP core.

A RISC-V processor verification project requires four key items:

- A test and verification plan

- The RTL to test (DUT – Device Under Test)

- Some tests to run

- A reference model to compare the results against

Compliance

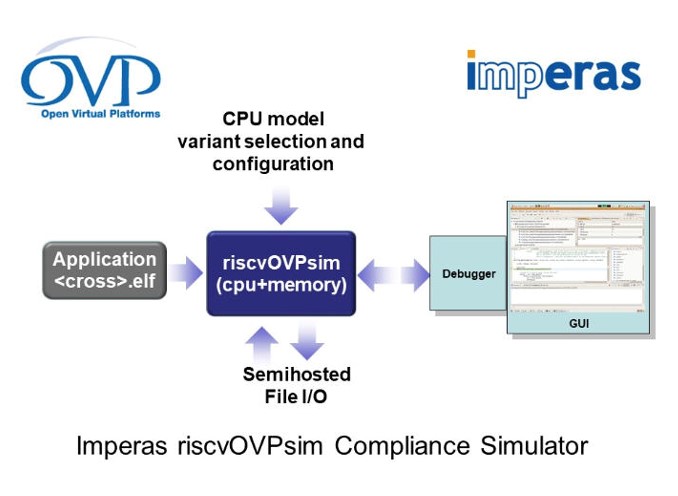

As RISC-V is an open ISA, compliance tests are essential to confirm that basic operation is in accordance with the specification. While this is a key requirement for the benefit of the software community and the tools and OS ecosystem, compliance is not the same as verification. Compliance requires that the basic structure and some basic behaviors fall within the envelope of features permitted by the specification; it does not exhaustively test every functional aspect of a processor, but rather confirms that the RTL implementer has read and understood the ISA specification. A processor is by definition a complex state machine, with dynamic interrupts and multiple operating modes and privilege levels. These present many scenarios outside the scope of the compliance tests, so a compliance suite should not be considered equivalent to a verification test suite. Compliance tests are just one aspect of the complete DV plan.

The RISC-V International technical committee working group for compliance (the Compliance Working Group, or CWG) includes a number of leading RISC-V members who contribute to both the test framework and the test suites themselves. The latest tests are publicly available and include the free-to-use riscvOVPsim reference simulator, which the CWG uses in developing the test suites. As an envelope simulation model, riscvOVPsim supports all of the configuration options of the ratified ISA specification, in addition to the draft specifications for bit manipulation and vectors. Building on the original contributions, in 2019 Imperas donated the full suite of RV32I compliance tests. These tests, which will be extended over time, should form the basis of any test plan for a RISC-V processor, since the first step for any RISC-V based SoC is to ensure compliance with the specification, which is also the reference for the software and tools ecosystem.
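Compliance tests of this kind typically work by having each test write its results into a memory region (a "signature") that is dumped after the run and compared with a reference signature produced by a reference simulator such as riscvOVPsim. The minimal Python sketch below illustrates only that comparison step. The one-hexadecimal-word-per-line file format and the names `load_signature`, `compare_signatures` and `fmt` are assumptions for illustration, not the exact format or tooling of any particular release of the compliance framework.

```python
from pathlib import Path


def load_signature(path):
    """Read a signature file, assumed (hypothetically) to hold one hex word per line."""
    return [int(token, 16) for token in Path(path).read_text().split()]


def compare_signatures(dut_words, ref_words):
    """Return a list of (index, dut, ref) mismatches; an empty list means a pass.

    A length difference is reported against None, because a truncated
    signature usually means the test did not run to completion.
    """
    mismatches = []
    for i in range(max(len(dut_words), len(ref_words))):
        dut = dut_words[i] if i < len(dut_words) else None
        ref = ref_words[i] if i < len(ref_words) else None
        if dut != ref:
            mismatches.append((i, dut, ref))
    return mismatches


def fmt(word):
    """Format a signature word for a report, tolerating missing entries."""
    return "missing" if word is None else f"0x{word:08x}"


if __name__ == "__main__":
    reference = [0x00000001, 0xFFFFFFFF, 0x80000000]
    dut = [0x00000001, 0xFFFFFFFE, 0x80000000]
    for index, got, expected in compare_signatures(dut, reference):
        print(f"word {index}: DUT={fmt(got)} reference={fmt(expected)}")
```

A signature match shows only that the checked results agree, which is exactly why compliance is not the same as verification.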

Directed Test & Coverage Metrics
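The final-reset pitfall and the mutation-testing remedy discussed in this section can be illustrated with a deliberately tiny model. The Python sketch below is not an RV32I simulator. It assumes a hypothetical eight-register machine with only `li` and `add`, treats the fault-free run as the reference model, and injects a high-level stuck-at fault into the adder output. A test "kills" the mutant only if its final architectural state differs from the reference.

```python
MASK32 = 0xFFFF_FFFF


def run(program, stuck_at=None):
    """Execute a toy program on a hypothetical 8-register machine.

    stuck_at, if given, is (bit, value): that bit of every ADD result is
    forced to value, modelling a high-level stuck-at fault in the adder.
    """
    regs = [0] * 8
    for op, rd, *args in program:
        if op == "li":
            result = args[0] & MASK32
        elif op == "add":
            result = (regs[args[0]] + regs[args[1]]) & MASK32
            if stuck_at is not None:
                bit, value = stuck_at
                if value:
                    result |= 1 << bit
                else:
                    result &= ~(1 << bit) & MASK32
        else:
            raise ValueError(f"unknown op {op}")
        if rd != 0:  # register 0 is hard-wired to zero, as in RISC-V
            regs[rd] = result
    return regs


def kills_mutant(program, fault):
    """A test detects the fault only if the faulty run diverges from the reference."""
    return run(program) != run(program, stuck_at=fault)


# Accumulate interim results in register 3...
accumulate = [("li", 1, 5), ("li", 2, 3), ("add", 3, 1, 2), ("add", 3, 3, 2)]
# ...versus the same work followed by a final reset of register 3.
accumulate_then_reset = accumulate + [("li", 3, 0)]

fault = (3, 0)  # hypothetical fault: bit 3 of the adder output stuck at 0

if __name__ == "__main__":
    print(kills_mutant(accumulate, fault))
    print(kills_mutant(accumulate_then_reset, fault))
```

Both programs execute the same `add` instructions, so a purely static view would credit them with the same adder coverage. Only the first one detects the injected fault, because the final reset in the second erases the evidence.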

As might be expected with custom instructions, a set of directed tests is required to exercise the full range and expected operation of the new functionality. These tests can be developed from the initial design specification and from the analysis or profiling of software applications and workloads. For this pool of software tests, the most important criterion is the coverage they achieve. Because the final test suite will be used extensively throughout the DV plan, and will probably become a foundational part of future regression testing, its coverage and efficiency need to be assessed.

Static coverage analysis alone can be misleading, since a late error in a test can invalidate its earlier parts. A trivial example is a test that accumulates interim results in a register but then resets that register at the end: the final result no longer depends on the earlier work, so static methods alone overstate the quality of the test. Using a simulation-based reference model, the log reports can be set up to highlight coverage results, and mutation-based testing with artificially introduced high-level stuck-at faults can confirm that a candidate test actually detects potential faults in the design.

Beyond measuring quality and progress against the test plan, coverage metrics also help rank the individual tests or modules, so the suite can be optimized to reach the most coverage as quickly and efficiently as possible, and tests that do not increase coverage can be eliminated. While coverage is not the only consideration in a DV plan, it provides useful metrics in the drive toward the quality and reliability of the full test suite.

Google Open Source Project: RISC-V DV with Instruction Stream Generation (ISG)

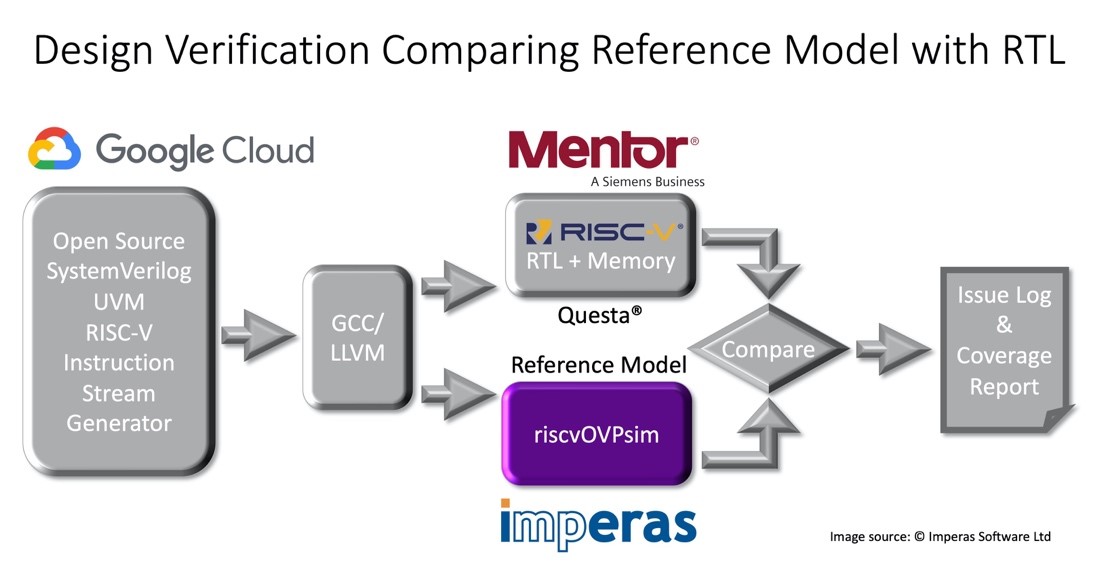

As a processor contains significantly more complex state, and potentially many more valid responses, than a typical SoC logic block, there is a need to find and test extreme corner cases. A common technique is a random instruction stream generator which, as the name implies, generates instructions in sequences or combinations that stress the design in unusual ways and exercise rare conditions. However, a purely random approach would produce many illegal and unnecessary combinations and would therefore be an inefficient basis for the test sequences. For RISC-V, a popular option is Google's open source RISC-V DV project, which produces constrained random tests. Because these ISG approaches generate a large number of tests and produce large output logs and data files, post-processing the results to find errors can be inefficient and impractical, as an early failure may invalidate all subsequent events. Using a reference simulator and golden reference model, such as those Imperas offers, allows a much more efficient process that covers all of the variability across the processor state space. Moreover, once a discrepancy is uncovered, the model is a natural guide for evaluating the correct behavior and debugging the design issue.
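To make "constrained" concrete, the Python sketch below generates a random RV32I instruction stream while enforcing a few legality constraints: 12-bit signed immediates for I-type instructions, 5-bit shift amounts, and a reserved-register set. It is a toy under stated assumptions, not the Google RISC-V DV generator itself; the instruction subset, the reserved registers (`x0` and `x2`) and the bias toward boundary immediates are arbitrary, hypothetical choices for illustration.

```python
import random

# Hypothetical constraints: never write x0 (writes are discarded) and leave
# x2 (sp) alone so generated code cannot corrupt the stack pointer.
RESERVED = {0, 2}
DEST_REGS = [r for r in range(32) if r not in RESERVED]
SRC_REGS = list(range(32))

# RV32I: I-type immediates are 12-bit signed; shift amounts are 5 bits.
IMM_MIN, IMM_MAX = -2048, 2047
CORNER_IMMS = [0, 1, -1, IMM_MIN, IMM_MAX]

R_TYPE = ["add", "sub", "xor", "or", "and", "sll", "srl", "sra", "slt", "sltu"]
I_TYPE = ["addi", "xori", "ori", "andi", "slti", "sltiu"]
SHIFT_IMM = ["slli", "srli", "srai"]


def random_imm(rng, corner_bias=0.3):
    """Pick a legal immediate, biased toward boundary values."""
    if rng.random() < corner_bias:
        return rng.choice(CORNER_IMMS)
    return rng.randint(IMM_MIN, IMM_MAX)


def generate(n, seed):
    """Generate n constrained-random RV32I instructions as assembly text."""
    rng = random.Random(seed)  # a fixed seed makes a failing stream reproducible
    stream = []
    for _ in range(n):
        kind = rng.choice(["r", "i", "shift"])
        rd = rng.choice(DEST_REGS)
        rs1 = rng.choice(SRC_REGS)
        if kind == "r":
            rs2 = rng.choice(SRC_REGS)
            stream.append(f"{rng.choice(R_TYPE)} x{rd}, x{rs1}, x{rs2}")
        elif kind == "i":
            stream.append(f"{rng.choice(I_TYPE)} x{rd}, x{rs1}, {random_imm(rng)}")
        else:
            shamt = rng.randint(0, 31)
            stream.append(f"{rng.choice(SHIFT_IMM)} x{rd}, x{rs1}, {shamt}")
    return stream


if __name__ == "__main__":
    for line in generate(5, seed=1):
        print(line)
```

The fixed seed is the key design choice here: when the reference model flags a mismatch, the same seed regenerates the exact stream for debug.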

SystemVerilog & Test Benches

SystemVerilog has become the established industry standard for SoC verification. By constructing test benches in SystemVerilog, DV engineers can rigorously test RTL block-level functionality. This block-level approach is the most common form of SoC verification across the industry, including at leading IP core providers. SystemVerilog has a significant learning curve, and while critics point to this as a fault, it really reflects the fact that today’s verification task is both complex and challenging; it is a classic case of blaming the language rather than the problem.

In addressing the verification requirements of RISC-V, it may be tempting to rethink the methodology as an abstract or academic discussion. The practical approach, however, is to look at the engineering teams most likely to undertake RISC-V processor verification: SoC DV teams, who are facing a tsunami of designs based on RISC-V, many with optimized custom extensions. As these teams already use SystemVerilog to test the SoC, it is a natural goal to expand the scope of their work to processor verification, using the same fundamental approaches and leveraging their in-house expertise. The key requirement is a dependable test bench.

#1 SystemVerilog Encapsulation: Pre-Test the Test Bench

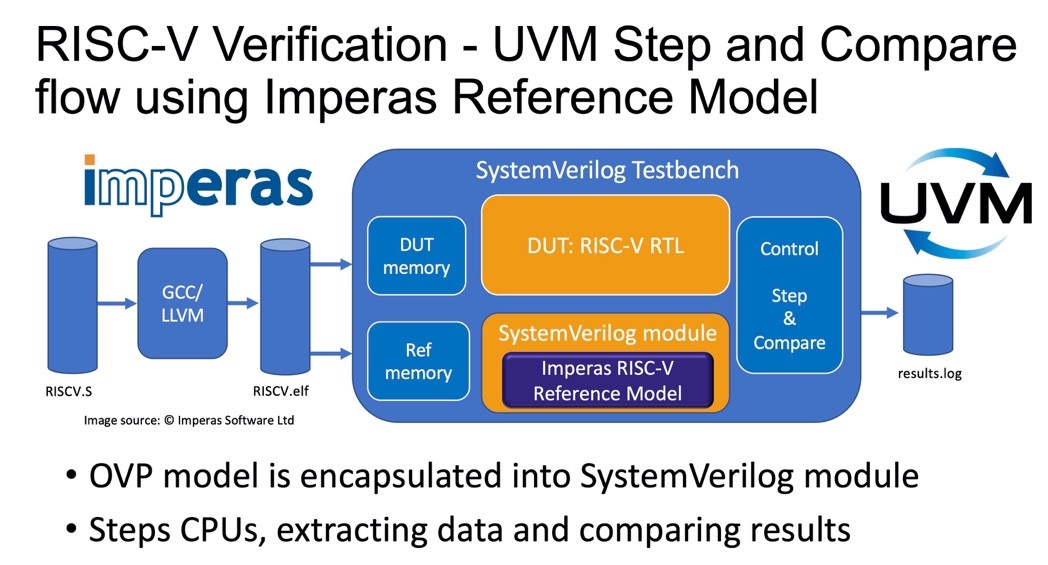

RTL-based simulation, while essential to the DV process, consumes the most resources, both in simulation time and in test development. A test bench is by definition central to the test program, and any defect in it will either affect the project schedule or allow errors to escape to the next stage of testing. Using a reference model in place of the DUT's RTL allows the test bench to be debugged and analyzed from a known-good starting point, verifying its set-up parameters before it is used to test the actual RTL of the DUT.

#2 SystemVerilog Encapsulation: Step-and-Compare

With the reference model encapsulated in a SystemVerilog test bench, the DUT's RTL can be set up in a side-by-side configuration, with the same stimulus applied to both and the resulting internal processor state compared as each instruction retires. This step-and-compare arrangement also provides several benefits:

- Interactive debug sessions when an issue is found

- Long simulations can be halted at the point of issue detection

- Asynchronous events and exceptions can be tested easily

- Automated simulation intensive test runs can be halted, avoiding unnecessary or wasted simulation resources
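The control flow of step-and-compare is simple; the hard parts (the SystemVerilog encapsulation and extracting architectural state from the RTL) are outside the scope of this sketch. The Python below models only the loop, assuming both the DUT and the reference expose a hypothetical `step()` method that retires one instruction and reports its effect. `ToyCore`, `Retired` and `step_and_compare` are illustrative names, not an Imperas or SystemVerilog API.

```python
from dataclasses import dataclass
from typing import Optional, Protocol

MASK32 = 0xFFFF_FFFF


@dataclass(frozen=True)
class Retired:
    """Simplified architectural effect of one retired instruction."""
    pc: int
    rd: int
    value: int


class Core(Protocol):
    """Hypothetical interface shared by the reference model and the DUT wrapper."""

    def step(self) -> Optional[Retired]:
        """Retire one instruction; return None once the program has finished."""
        ...


class ToyCore:
    """Toy stand-in for either side: runs (rd, rs1, imm) 'addi'-style operations.

    bug_at_pc injects an off-by-one result at one address so the
    comparison has something to find.
    """

    def __init__(self, program, bug_at_pc=None):
        self.program = program
        self.bug_at_pc = bug_at_pc
        self.regs = [0] * 32
        self.pc = 0

    def step(self) -> Optional[Retired]:
        index = self.pc // 4
        if index >= len(self.program):
            return None
        rd, rs1, imm = self.program[index]
        value = (self.regs[rs1] + imm) & MASK32
        if self.pc == self.bug_at_pc:
            value = (value + 1) & MASK32
        if rd != 0:  # x0 stays zero
            self.regs[rd] = value
        retired = Retired(self.pc, rd, self.regs[rd])
        self.pc += 4
        return retired


def step_and_compare(dut: Core, ref: Core, max_steps: int = 1_000_000):
    """Step both cores in lockstep and stop at the first divergence.

    Returns None if both finish in agreement, otherwise
    (step_index, dut_record, reference_record).
    """
    for step in range(max_steps):
        got, expected = dut.step(), ref.step()
        if got != expected:
            return step, got, expected  # halt now rather than simulate further
        if got is None:
            return None  # both programs completed identically
    raise RuntimeError("step limit reached; the DUT may be hung")


if __name__ == "__main__":
    program = [(1, 0, 5), (2, 1, 7), (3, 2, -1)]
    print(step_and_compare(ToyCore(program), ToyCore(program)))
    print(step_and_compare(ToyCore(program, bug_at_pc=4), ToyCore(program)))
```

Because the comparison depends only on the `Core` interface, the same harness can first be run with the reference model in both positions, which corresponds to pre-testing the test bench, before the RTL is plugged in. Halting at the first divergence is what saves the wasted simulation time listed above.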

#3 SystemVerilog Encapsulation: Test the SoC & Processor Integration in Hybrid Mode

Having established the verification and test status of the processor, the SoC project plan will move on to DV of the rest of the SoC design. Since the reference model is already encapsulated in SystemVerilog, it can replace the actual processor RTL in the test bench used for the SoC design. This dramatically reduces the RTL simulation load and provides a known-good reference for correct processor behavior. Of course, the final stages of verification will be based on the full RTL view of the complete design, but this early hybrid approach accelerates the early phase of testing and uncovers many issues quickly.

The professional DV community also uses other techniques, such as formal verification, which may prove useful for some aspects of RISC-V processor verification. While dynamic interrupts and other unpredictable operational events are best addressed with a simulation-based methodology, formal approaches have traditionally proven useful in some specialized cases. An example is a floating-point co-processor unit, where a clear mathematical objective can be subjected to functional proof with formal methods by experts with specialist tools, extensive experience and knowledge.

How to Get Started with RISC-V Processor DV

Typically, a good place to start a DV plan is alongside the system architect and SoC designers as they evaluate RISC-V configurations and IP selection. As RISC-V is an open ISA, there are now many possible sources of processor IP.

#1 RISC-V Processor Verification: Cores Downloaded as Open Source Hardware

Open source hardware has an attractive price, but verification and compliance testing will confirm whether it is also good value. Any SoC developer should be familiar with the RISC-V International compliance suite and, as a matter of routine, run it against any new core delivery as soon as possible. Some or all of the RISC-V processor verification techniques described above may be applied to an open source core, especially if any modifications are planned, either to remove unneeded functionality or to extend the core with optimized custom instructions.

#2 RISC-V Processor Verification: Cores Received from IP Providers
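One small, concrete part of checking a delivered configuration is confirming that the core reports the single-letter ISA extensions that were ordered. RISC-V cores can expose this through the machine-mode `misa` CSR, where bits 25..0 flag extensions A to Z and the two most significant bits encode MXL (the native XLEN). The Python sketch below decodes a `misa` value that is assumed to have been read from the core by a test program, for example with a `csrr` instruction, which is outside the sketch. The function names are hypothetical, and because `misa` may legally read as zero on some implementations, this is a sanity check rather than a substitute for configuration-specific tests.

```python
import string

MXL_WIDTHS = {1: 32, 2: 64, 3: 128}


def decode_misa(misa: int, xlen: int):
    """Decode a misa value into (XLEN from MXL, set of extension letters).

    Bits 25..0 flag extensions 'A'..'Z'; the top two bits hold MXL.
    """
    mxl = (misa >> (xlen - 2)) & 0b11
    letters = {c for bit, c in enumerate(string.ascii_uppercase) if misa >> bit & 1}
    return MXL_WIDTHS.get(mxl), letters


def check_configuration(misa: int, xlen: int, expected: str):
    """Compare reported extensions with the ordered ones (hypothetical helper).

    Returns (missing, unexpected) letter sets; both empty means a match.
    """
    width, reported = decode_misa(misa, xlen)
    if width != xlen:
        raise ValueError(f"misa reports XLEN={width}, expected {xlen}")
    wanted = set(expected.upper())
    return wanted - reported, reported - wanted


if __name__ == "__main__":
    # An RV32IMAC core: MXL=1 plus the A, C, I and M bits.
    print(check_configuration(0x40001105, 32, "IMAC"))
    print(check_configuration(0x40001105, 32, "IMAFC"))
```

Multi-letter extensions (the Z* and X* families) are not visible in `misa`, so they still need their own directed tests.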

Many of the newer companies offering RISC-V IP provide a broad range of configuration options. It is therefore prudent for any adopter to verify and test, in detail, the actual final configuration they plan to use before testing the infrastructure and interconnect with the rest of the SoC. As described above, the SystemVerilog test bench and methodology can then be reused directly as a reference for SoC verification, accelerating the overall test schedule.

#3 RISC-V Processor Verification: Cores Developed Internally

As RISC-V is an open ISA specification, one approach may be to develop a unique design targeted at the application's requirements and tuned with an optimal set of features and functionality. For complex extensions or custom instructions, this may prove more efficient than attempting to modify an imported design. A comprehensive DV plan is required for any new processor core.

#4 RISC-V Processor Verification: Cores Extended with Custom Extensions

One of the attractive features of RISC-V is the opportunity to add optimized extensions or instructions to the processor without compromising support for the standard base instructions, which provide a common platform for the software and tools ecosystem. A natural approach to profiling and analyzing RISC-V custom instructions is software-driven design and analysis. Using the full application workload, it is possible to profile the options and trade-offs for custom instructions that retain the performance, cost and power advantages of dedicated hardware together with the flexibility of software. This application code therefore also becomes part of the functional verification plan. Imperas models and tools support this software-driven approach and allow custom extensions to be incorporated within the model without affecting the quality and reliability of the reference model. In addition to developing the test plan for the custom extensions, it is also recommended to run the full compliance suite and test plan for the standard features of the processor, to ensure that implementing the custom instructions did not unintentionally affect the underlying functionality of the base processor.
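The two ideas in this section, adding a custom instruction to the reference model and re-running the standard-feature tests afterwards, can be sketched with a toy Python model. This is not the Imperas extension mechanism: the decode table, the `ToyModel` class and the multiply-accumulate `mac` instruction are all hypothetical. The sketch keeps custom operations in a separate table, refuses any extension that would redefine a base operation, and checks that a base-only program produces identical results with and without the extension.

```python
MASK32 = 0xFFFF_FFFF

# Base operations of a toy reference model: name -> fn(rd_value, rs1_value, rs2_value).
BASE_OPS = {
    "add": lambda rd, a, b: (a + b) & MASK32,
    "sub": lambda rd, a, b: (a - b) & MASK32,
    "xor": lambda rd, a, b: a ^ b,
}


class ToyModel:
    """Toy reference model whose custom extensions may add, but never replace, ops."""

    def __init__(self, extensions=()):
        self.ops = dict(BASE_OPS)
        for ext in extensions:
            for name, fn in ext.items():
                if name in self.ops:
                    raise ValueError(f"extension redefines existing op {name!r}")
                self.ops[name] = fn

    def run(self, program, regs=None):
        """Execute (name, rd, rs1, rs2) tuples and return the final registers."""
        regs = list(regs or [0] * 32)
        for name, rd, rs1, rs2 in program:
            value = self.ops[name](regs[rd], regs[rs1], regs[rs2])
            if rd != 0:  # x0 stays zero
                regs[rd] = value
        return regs


# Hypothetical custom multiply-accumulate instruction: rd = rd + rs1 * rs2.
CUSTOM_MAC = {"mac": lambda rd, a, b: (rd + a * b) & MASK32}

if __name__ == "__main__":
    init = [0, 3, 4, 10] + [0] * 28
    base_program = [("add", 5, 1, 2), ("sub", 6, 3, 1), ("xor", 7, 5, 6)]
    base = ToyModel().run(base_program, init)
    extended = ToyModel([CUSTOM_MAC]).run(base_program, init)
    print("base behavior unchanged:", base == extended)
    print("mac result:", ToyModel([CUSTOM_MAC]).run([("mac", 3, 1, 2)], init)[3])
```

In a real flow the base-only check would be the full compliance suite and standard test plan run against the extended RTL, not a three-instruction program.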

Summary and Conclusions

All adopters of RISC-V, and anyone exploring optimized custom instructions, need to consider the IP options and verification test plan requirements for any SoC based on RISC-V. With the many options and freedoms that RISC-V offers SoC developers, a dedicated processor verification plan is a key part of every successful project. For more details on RISC-V and Imperas reference models for verification, please visit http://www.imperas.com/imperas-riscv-solutions

About the Author

Before joining Imperas, Kevin held a variety of senior business development, licensing, segment marketing, and product marketing roles at ARM, MIPS and Imagination Technologies focused on CPU IP and software tools. Previously Kevin was a principal analyst for IoT at ABI Research, focused on connected embedded and IoT, including value chains for IP, SoCs, software, standards and ecosystems. Kevin started his career in custom SoCs for mobile and embedded applications with semiconductor firms in both Europe and Silicon Valley. Kevin received his BSc degree in Microelectronics and Microprocessor Applications from the University of Newcastle upon Tyne, UK.
