We’re excited to share some recent progress in the development of our first-generation RISC-V fusion computing processor core – the X100. X100 has already made significant leaps with its general-purpose computing and application-oriented fusion computing capabilities.

The X100’s general-purpose computing performance exceeds that of the Arm Cortex-A75, and it significantly outperforms the Arm Cortex-A76 in AI, vision and robotics applications.

As a high-performance fusion processor core based on the RISC-V architecture, the X100 will be used in SpacemiT chips aimed at application scenarios with relatively high computing demands. These include high-performance CPUs, edge computing, pan-intelligent robots and autonomous driving.

In general-purpose computing, the X100’s single-core benchmark scores reach 7.5 SPECint2k6/GHz, 7.7 CoreMark/MHz and 6.5 DMIPS/MHz (Dhrystone), and the design supports up to 16 cores computing simultaneously.

The X100 has also been customized and optimized for fusion computing. Its 16 cores provide a maximum computing power of over 8 TOPS at INT8, and in-depth optimizations have been made for mainstream machine vision and SLAM algorithms. At the architecture and microarchitecture level, unlike many other RISC-V cores that improve performance by adding custom instructions, the X100 relies on native instructions and hardware techniques such as instruction fusion to address the limited performance of the basic RISC-V instructions.
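
As a rough back-of-envelope reading of the figures above, the sketch below splits the quoted 8 TOPS evenly across 16 cores and converts the per-core share into operations per clock cycle. The clock frequency is a hypothetical placeholder, not a published X100 figure, and the function names are illustrative only.

```python
# Back-of-envelope arithmetic for the quoted peak INT8 figure.
# ASSUMPTION: the 2.0 GHz clock used below is a placeholder,
# not a published X100 frequency.


def per_core_tops(total_tops: float, cores: int) -> float:
    """Split an aggregate TOPS figure evenly across cores."""
    return total_tops / cores


def ops_per_cycle(core_tops: float, clock_ghz: float) -> float:
    """Convert one core's TOPS into operations per clock cycle."""
    return (core_tops * 1e12) / (clock_ghz * 1e9)


if __name__ == "__main__":
    share = per_core_tops(8.0, 16)  # quoted: 8 TOPS across 16 cores
    print(f"per-core share: {share} TOPS")
    print(f"ops/cycle at an assumed 2.0 GHz: {ops_per_cycle(share, 2.0)}")
```

Since the text quotes "over 8 TOPS", the per-core share computed this way is a lower bound.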

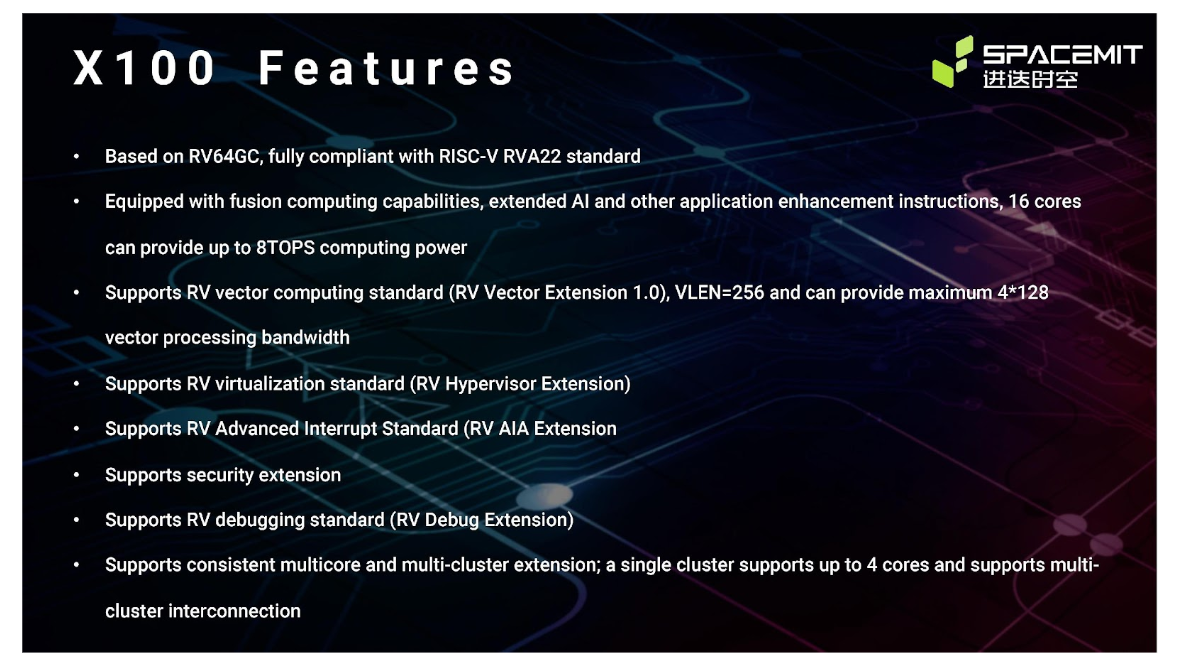

X100 Features

- Based on RV64GC, fully compliant with RISC-V RVA22 standard

- Equipped with fusion computing capabilities through AI and other application-enhancement instruction extensions; 16 cores can provide up to 8 TOPS of computing power

- Supports RV vector computing standard (RV Vector Extension 1.0) with VLEN=256 and a maximum vector processing width of 4*128 bits

- Supports RV virtualization standard (RV Hypervisor Extension)

- Supports RV Advanced Interrupt Standard (RV AIA Extension)

- Supports security extension

- Supports RV debugging standard (RV Debug Extension)

- Supports coherent multicore and multi-cluster extension; a single cluster supports up to 4 cores, and multiple clusters can be interconnected

Unique X100 Specifications

X100 has been completely developed and designed in accordance with the RISC-V instruction set standard and has several unique advantages in Vector Computing, Virtualization and Interrupts.

Version 1.0 of the RISC-V Vector extension is the latest official version, and it is not supported by other RISC-V chips currently on the market. In addition, Armv8-architecture cores such as the Cortex-A75 and Cortex-A76 support only the fixed-width Neon SIMD extension, which is less scalable and less flexible to program than the Vector extension of the X100.

Virtualization has long been essential in application scenarios such as servers. As intelligent functions such as autonomous driving become more widespread, virtualization technology can deliver value in a broader range of applications. Virtualization support in RISC-V cores has so far lagged across the industry. With the X100, significant progress has been made, and more virtualization support is now available in the RISC-V field.

Vector Calculation Support

X100 is equipped with a vector computing engine that is fully compatible with the latest RISC-V Vector 1.0 standard and supports a rich set of data types (INT8/16/32/64, FP16/32/64 and BF16). X100 provides 32 256-bit vector registers and supports a maximum vector processing width of 4*128 bits. The vector computing engine is shared between two cores, which effectively improves the energy efficiency ratio (EER) of data processing. In addition, X100 adds fusion computing instructions that operate on the vector registers and provide flexible, efficient matrix computing and other capabilities. Unlike the Arm cores available in China, whose vector computing power is severely limited by Wassenaar Arrangement export controls, X100 greatly improves the computing power of the processor through customized optimization of the vector architecture.
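
To make the register figures above concrete, the following sketch computes how many elements of each listed data type fit in one 256-bit vector register, using the RVV 1.0 relation VLMAX = LMUL * VLEN / SEW. Only VLEN=256 and the 32-register count come from the text; the helper names are illustrative, and only integer LMUL values are handled.

```python
# Element counts for a VLEN=256 vector register file.
VLEN_BITS = 256  # from the text
NUM_VREGS = 32   # from the text

# Element widths (SEW) in bits for the data types listed in the text.
ELEMENT_WIDTH_BITS = {
    "INT8": 8, "INT16": 16, "INT32": 32, "INT64": 64,
    "FP16": 16, "BF16": 16, "FP32": 32, "FP64": 64,
}


def elements_per_group(sew_bits: int, lmul: int = 1,
                       vlen_bits: int = VLEN_BITS) -> int:
    """VLMAX = LMUL * VLEN / SEW (integer LMUL only in this sketch)."""
    return lmul * vlen_bits // sew_bits


def register_file_bytes() -> int:
    """Total architectural vector state in bytes."""
    return NUM_VREGS * VLEN_BITS // 8


if __name__ == "__main__":
    for name, width in ELEMENT_WIDTH_BITS.items():
        print(f"{name}: {elements_per_group(width)} elements per register")
    print(f"vector register file: {register_file_bytes()} bytes")
```

Grouping registers with a larger LMUL scales the element count proportionally, which is one reason vector code can be written without hard-coding the register width.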

Virtualization Support

For scenarios such as servers and Automotive chips, X100 supports RISC-V virtualization standards and can run RISC-V virtualization software.

In addition to the above standards, X100 also supports the RISC-V bit-manipulation extension (B), the Debug standard, the Advanced Interrupt Architecture (AIA) and several other functions.

Innovation in Microarchitecture

X100’s microarchitecture has the advantages of both high performance and high energy efficiency. A series of innovations were made in the microarchitecture of X100 to greatly improve performance.

Instruction Fusion

Because of their simplicity, RISC-V’s basic integer and floating-point instructions (the I/F/D standards) are less efficient than ARM’s in certain cases. Many RISC-V processor cores partially solve this problem by extending the instruction set, but this approach can fragment the basic instruction set. X100 instead monitors instruction sequences in hardware and fuses them intelligently, which addresses the inefficiency of the basic RISC-V instructions and improves instruction execution efficiency without sacrificing the simplicity and order of the back-end pipeline.

The fused instruction sequences include consecutive high and low immediate loads, consecutive address increments and decrements, consecutive address accesses, consecutive shifts, consecutive common ALU operations, load followed by ALU operation, load followed by ALU operation and store, and specific Deep Fusion optimizations. Deep Fusion not only preserves the basic RISC-V instruction set and the RISC-V ecosystem but also greatly improves the performance of programs running on X100.
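
As an illustration of the first category above (high and low immediate coalescing), here is a minimal software sketch of a fusion pass that merges a `lui` followed by a dependent `addi` into one internal operation. It is not SpacemiT’s hardware design: the `Insn` tuple, the `li32` pseudo-op and the function name are hypothetical, and the sketch computes only the low 32 bits of the result (RV64 sign extension is omitted).

```python
from typing import List, NamedTuple, Optional


class Insn(NamedTuple):
    """Hypothetical, simplified instruction record for this sketch."""
    op: str
    rd: str
    rs1: Optional[str] = None
    imm: int = 0


def fuse_lui_addi(stream: List[Insn]) -> List[Insn]:
    """Merge `lui rd, hi` + `addi rd, rd, lo` into one `li32 rd, value`."""
    fused: List[Insn] = []
    i = 0
    while i < len(stream):
        cur = stream[i]
        nxt = stream[i + 1] if i + 1 < len(stream) else None
        if (cur.op == "lui" and nxt is not None and nxt.op == "addi"
                and nxt.rd == cur.rd and nxt.rs1 == cur.rd):
            # lui places imm in bits 31:12; addi adds a signed 12-bit value.
            value = ((cur.imm << 12) + nxt.imm) & 0xFFFFFFFF
            fused.append(Insn("li32", cur.rd, None, value))
            i += 2  # both original instructions are consumed
        else:
            fused.append(cur)
            i += 1
    return fused


if __name__ == "__main__":
    program = [
        Insn("lui", "a0", None, 0x12345),
        Insn("addi", "a0", "a0", 0x678),
        Insn("lw", "a1", "a0", 0),
    ]
    for insn in fuse_lui_addi(program):
        print(insn)
```

The program binary is unchanged by this kind of fusion; only the internal operation stream becomes shorter, which is why the approach stays compatible with standard RISC-V software.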

Data Prefetching

In computing-intensive scenarios, the data access capability of the processor is often the key to efficient computing, especially in scenarios such as AI and image processing where the amount of data far exceeds the cache capacity of the processor. X100 supports multi-stream, multi-step data prefetching and adjusts the frequency and intensity of prefetching according to memory access frequency and access type to ensure efficient use of bus bandwidth.
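
The general idea of tracking several access streams and prefetching a few steps ahead can be sketched with a textbook stride prefetcher. This is a generic model, not the X100 implementation: the class name, the per-stream table and the fixed `degree` (how many steps ahead to fetch) are assumptions made for illustration, and the adaptive throttling described above is not modeled.

```python
from typing import Dict, Hashable, List, Tuple


class StridePrefetcher:
    """Generic multi-stream stride prefetcher (illustrative model only)."""

    def __init__(self, degree: int = 2, max_streams: int = 4) -> None:
        self.degree = degree            # steps to prefetch ahead
        self.max_streams = max_streams  # streams tracked at once
        # stream id -> (last address, last observed stride)
        self.streams: Dict[Hashable, Tuple[int, int]] = {}

    def access(self, stream_id: Hashable, addr: int) -> List[int]:
        """Record an access and return the addresses to prefetch."""
        prefetches: List[int] = []
        if stream_id in self.streams:
            last_addr, stride = self.streams[stream_id]
            new_stride = addr - last_addr
            # Prefetch only once the same non-zero stride repeats.
            if new_stride != 0 and new_stride == stride:
                prefetches = [addr + new_stride * k
                              for k in range(1, self.degree + 1)]
            self.streams[stream_id] = (addr, new_stride)
        elif len(self.streams) < self.max_streams:
            self.streams[stream_id] = (addr, 0)
        return prefetches


if __name__ == "__main__":
    pf = StridePrefetcher(degree=2)
    for address in (0x1000, 0x1040, 0x1080):
        print(hex(address), [hex(p) for p in pf.access("load_a", address)])
```

Each stream is tracked independently, so an interleaved second access pattern (for example, one walking backwards through memory) trains its own stride without disturbing the first.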

Branch Prediction

The X100 employs a multi-stage hybrid prediction architecture. This includes a zero-latency Next-Line predictor which can predict the jump direction and jump address of conditional branches, absolute jumps and function return instructions every cycle.

Behind it, a larger-capacity post-predictor built from structures such as TAGE, BTB and RAS makes more accurate predictions for branch instructions and corrects the Next-Line predictor at each pipeline stage of the prediction architecture.
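
The interplay between a fast first-stage predictor and a slower, more accurate one can be sketched with textbook building blocks. The code below is not the X100 design: it substitutes a simple 2-bit saturating counter for TAGE, models a basic return address stack (RAS), and shows the override rule in which a disagreement from the later stage redirects the front end. All class and function names are hypothetical.

```python
from typing import List, Optional, Tuple


class TwoBitCounter:
    """Classic 2-bit saturating counter (a stand-in for TAGE here)."""

    def __init__(self) -> None:
        self.state = 1  # 0-1 predict not taken, 2-3 predict taken

    def predict(self) -> bool:
        return self.state >= 2

    def update(self, taken: bool) -> None:
        if taken:
            self.state = min(3, self.state + 1)
        else:
            self.state = max(0, self.state - 1)


class ReturnAddressStack:
    """Fixed-depth stack that predicts function return targets."""

    def __init__(self, depth: int = 8) -> None:
        self.depth = depth
        self.stack: List[int] = []

    def on_call(self, return_addr: int) -> None:
        if len(self.stack) == self.depth:
            self.stack.pop(0)  # drop the oldest entry on overflow
        self.stack.append(return_addr)

    def on_return(self) -> Optional[int]:
        return self.stack.pop() if self.stack else None


def predict_with_override(fast_taken: bool,
                          slow_taken: bool) -> Tuple[bool, bool]:
    """Return (final prediction, whether the front end is redirected)."""
    return slow_taken, fast_taken != slow_taken


if __name__ == "__main__":
    counter = TwoBitCounter()
    for outcome in (True, True, False):
        counter.update(outcome)
    print("counter predicts taken:", counter.predict())
    print("override:", predict_with_override(True, False))
```

When the two stages agree, the fast prediction stands and no cycles are lost; only a disagreement costs a front-end redirect.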

Energy Efficiency Optimization

The pipeline concurrency of X100 has been significantly optimized. Front-end and back-end bandwidth and pipeline resources are balanced to get the most out of a three-issue design. X100 fully customizes the vector pipeline according to computing power and resource overhead, and integrates in-order multi-issue vector execution and out-of-order scalar execution into a unified architecture that shares scheduling and execution units.

New Breakthrough in Fusion Computing

To gain data processing efficiency, computing energy efficiency and low context-switching latency, the X100 reuses the vector registers as the register state for fusion computing, which covers AI computing, visual processing and nonlinear operations. Compared with the public version of the ARM architecture, which requires an external NPU to provide AI computing power, the X100’s AI computing is driven by instructions, giving it better programmability, better adaptability to rapidly changing algorithms and relatively lower hardware costs.

AI Computing Power

The X100 uses a unique 2D convolution instruction combined with an innovative memory architecture to accelerate AI applications. Compared with ordinary high-performance processors, the processor’s integrated AI instruction set can provide more than 20 times the computing power of SIMD instructions and more than a 10-fold performance improvement in model inference.
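
For readers unfamiliar with the operation being accelerated, the sketch below is a plain reference 2D convolution over integer data, written as the sliding-window multiply-accumulate used by most ML frameworks (technically a cross-correlation, with no padding and stride 1). It only shows the arithmetic involved; it says nothing about how the X100 instruction is encoded or executed, and the function names are illustrative.

```python
from typing import List

Matrix = List[List[int]]


def conv2d_reference(image: Matrix, kernel: Matrix) -> Matrix:
    """Valid-mode 2D convolution (no padding, stride 1), integer accumulate."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = [[0] * out_w for _ in range(out_h)]
    for y in range(out_h):
        for x in range(out_w):
            acc = 0  # Python ints do not overflow; hardware would use a wide accumulator
            for ky in range(kh):
                for kx in range(kw):
                    acc += image[y + ky][x + kx] * kernel[ky][kx]
            out[y][x] = acc
    return out


def mac_count(image: Matrix, kernel: Matrix) -> int:
    """Number of multiply-accumulates the reference loop performs."""
    kh, kw = len(kernel), len(kernel[0])
    return (len(image) - kh + 1) * (len(image[0]) - kw + 1) * kh * kw


if __name__ == "__main__":
    img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    ker = [[1, 0], [0, -1]]
    print(conv2d_reference(img, ker))
    print("MACs:", mac_count(img, ker))
```

The four nested loops are what a dedicated convolution instruction collapses: each output element needs `kh * kw` multiply-accumulates, so the work grows quickly with kernel and image size.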

Compared with today’s heterogeneous NPU-based approach, the AI deployment software stack built on the processor’s fusion computing power makes full use of open-source community resources and connects seamlessly to onnxruntime, tflite, pytorch-mobile and other frameworks, ensuring that every calculation result is consistent with the open-source software. Deploying an algorithm does not require learning or adapting to a hardware-specific AI software stack, so it can be applied quickly and users can focus on the AI algorithm itself during product development. The process and habits of developing AI applications are the same as for traditional CPU applications. This removes the extra complexity of hardware and software debugging that heterogeneous hardware brings, which can greatly shorten the development cycle of AI applications.

Visual Processing Capabilities

Processors frequently take part in image pre-processing and post-processing in visual applications. Through instruction and microarchitecture customization, X100 improves the performance of visual computing operations such as resize, affine transformation and color conversion (ColorCvt) by more than 35%, and in some cases by more than 50%.
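
As an example of the kind of per-pixel work involved in color conversion, the sketch below converts RGB pixels to grayscale with a common fixed-point luma approximation (weights 77/256, 150/256 and 29/256, close to 0.299, 0.587 and 0.114). The weights and function names are choices made for this illustration; the text does not specify which conversions or coefficients X100 accelerates.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]


def rgb_to_gray(r: int, g: int, b: int) -> int:
    """Fixed-point luma: integer weights sum to 256, so shift right by 8."""
    return (77 * r + 150 * g + 29 * b) >> 8


def convert_image(pixels: List[List[Pixel]]) -> List[List[int]]:
    """Apply the conversion to every pixel of a row-major image."""
    return [[rgb_to_gray(*px) for px in row] for row in pixels]


if __name__ == "__main__":
    image = [[(255, 255, 255), (0, 0, 0)], [(255, 0, 0), (0, 0, 255)]]
    print(convert_image(image))
```

Every pixel is processed independently with the same three multiplies and adds, which is the pattern that vector and customized instructions exploit.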

Computing Capability of Nonlinear Solver

SLAM algorithms are at the core of applications such as robotics, AR and VR. Nonlinear optimization is currently the most widely used state estimation approach in the SLAM industry, and it is also the core of SLAM computation. Through instruction and microarchitecture customization, X100 improves the performance of nonlinear solvers such as BA (Bundle Adjustment) by more than 30%.
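
Bundle adjustment is a nonlinear least-squares problem, typically solved with Gauss-Newton or Levenberg-Marquardt iterations. The toy sketch below applies Gauss-Newton to a one-parameter curve fit so that the update step is visible; real BA solves for thousands of camera and landmark parameters with sparse linear algebra. The model `y = exp(a * x)` and all names are chosen purely for illustration.

```python
import math
from typing import Sequence


def fit_exponent(xs: Sequence[float], ys: Sequence[float],
                 a: float = 0.0, iterations: int = 20) -> float:
    """Gauss-Newton fit of `a` in y = exp(a * x) by least squares."""
    for _ in range(iterations):
        jtj = 0.0  # J^T J (a scalar, since there is one parameter)
        jtr = 0.0  # J^T r
        for x, y in zip(xs, ys):
            pred = math.exp(a * x)
            residual = pred - y
            jac = x * pred  # d(residual)/da
            jtj += jac * jac
            jtr += jac * residual
        if jtj == 0.0:
            break  # no information to update from
        a -= jtr / jtj  # Gauss-Newton step: solve (J^T J) da = -J^T r
    return a


if __name__ == "__main__":
    xs = [0.0, 1.0, 2.0, 3.0]
    ys = [math.exp(0.5 * x) for x in xs]  # synthetic, noise-free data
    print("estimated a:", fit_exponent(xs, ys))
```

In full bundle adjustment the scalar `jtj` becomes a large sparse matrix, and building and solving that system dominates the runtime.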

Future Applications of X100

Because RISC-V is an open instruction set architecture, chip companies are in effect licensed to use and extend it. SpacemiT makes full use of this openness to customize and optimize the instruction set and microarchitecture.

Thanks to all these breakthroughs in general-purpose and fusion computing capabilities, the X100 is ideal for use in applications such as Edge Servers, High-end Intelligent Robots and Autonomous Driving. Although there is no lack of RISC-V computing cores on the market, domestic high-performance RISC-V cores that can be truly commercialized are still very rare. The X100 has reached new heights domestically in terms of its specifications, performance and integrated computing power.

The high-performance SoC that will be equipped with the X100 processor core is now being developed independently by SpacemiT, alongside our technical cooperation with various other partners. The SpacemiT team will provide the industry with RISC-V computing chips that deliver greater computing power and superior performance.