Introduction

Various methods are used for design verification, including simulation, emulation, and formal verification. While simulation and emulation are effective at identifying bugs, they may overlook corner case and simulation-resistant bugs unless specifically targeted. Moreover, achieving high coverage through simulation requires generating thousands or even millions of testbenches and simulating the design for millions of cycles. In contrast, formal verification conducts exhaustive testing, eliminating chances of undetected bugs.

In recent years, the adoption of RISC-V has increased, driven by its open-source nature, it allows companies to design and build their own custom implementation of a RISC-V processor – ranging from non-pipelined cores to out-of-order, multi-core, super-scalar architectures. The flexibility is one of RISC-V’s greatest strengths, but it also introduces significant verification challenges.

The Challenge

While building custom processors using RISC-V Instruction Set Architecture (ISA) can be rewarding, it also presents significant verification challenges. Many designers leverage verified open-source designs to reduce the verification efforts. However, modifications are almost always required to meet specific power, performance and area (PPA) requirements. These changes, which are often proprietary, create countless new scenarios that must be tested thoroughly and are the most critical and error-prone, which can potentially introduce subtle corner-case bugs.

In today’s highly competitive market, companies push the limits to deliver high-performance cores faster than ever. This puts pressure on designers to apply more advanced optimizations to meet the PPA requirements. At the same time, time-to-market is a critical factor in the success of a product. Together, these factors make verification the central challenge in the development process. There are two primary verification methods-simulation and formal verification- each offer unique advantages but also introduce distinct complexities that must be carefully dealt with.

Simulation-based approaches

In simulation-based approaches, verification engineers need to write or randomly generate input stimuli to test specific behaviours. A significant amount of effort goes into identifying interesting scenarios and writing required input stimuli to drive the design to the desired states-usually before writing any actual test. This increases the time required to setup a simulation testbench and makes the probability of missing a corner case much higher.

Simulation effectiveness is tightly coupled to the quality of the test plan, and exploring the full state space, may require millions of tests. Even with a 100% test plan execution and functional coverage, untested scenarios may still hide critical bugs. This means, large and expensive compute farms are required to keep running the millions of tests and regressions in the hope of finding bugs. These runs can take days, weeks or even months in extreme cases.

Formal Verification

In formal verification, engineers write assertions that test behaviour rather than specific scenarios as in simulation-based approaches. This relieves engineers from identifying corner-cases and can exhaustively test the state-space and capture critical bugs that are often missed in simulation due to the complexity of the scenario that is not covered with a targeted test. Although Formal Property Verification (FPV) can be seen as more effective than simulation in terms of finding corner-case bugs and gaining confidence that the design is 100% bug-free, it also comes with its own challenges.

- Engineers should develop a set of constraints that protects the design from going into illegal states. Developing such constraints can be quite time-consuming.

- In data-intensive and complex designs, FPV tend to not converge due to the state-space explosion that could happen if the necessary abstractions are not done.

- Getting FPV training and being able to do effective bug hunting is a time-consuming process. Not all companies can have their Design Verification (DV) team spare multiple hours or even days every month for a training program.

- Commercially available tools are often seen as more expensive compared to other EDA tools used in other steps of the development chain.

- Complex and in-depth knowledge of the field is required to tackle high proof complexity issues for complex designs.

To put things into perspective let’s assume one simulation testbench takes just 1 nanosecond to execute. If a design implements the following simple expression:

F = A & B

There are four possible input combinations- meaning 4 runs of the simulation testbenches and 4 nanoseconds to test all cases.

Now, add one more input variable:

F = A & B & C

This doubles the combinations to 8, requiring 8 nano seconds. If we add another input, the total increases to 16. In fact, the number of required tests doubles with each new input, following an exponential growth: 2n, where n is the number of inputs.

For a design with just 100 input variables (or bits), we would need: 2100 ≈ 1.27 × 1030 runs – which would take over 40 trillion years to simulate, even at 1 nanosecond per test. That’s roughly 3000 times the age of the universe. In contrast, formal verification can often reason about all these states symbolically and, depending on the complexity, complete the proof in minutes instead of millennia.

RISC-V and Formal Verification

RISC-V processors are not different than other digital designs and can benefit from the power formal methods. Verifying a full processor with formal can be a challenging task for both the engineer and the formal engines. At LUBIS EDA, our philosophy for tackling complex processor verification is simple: divide and conquer. With smart abstractions and a gated process, we can catch everything from simple implementation bugs in instructions to complex corner-case issues. These harder-to-find bugs, which often require specific instruction sequences and register values, are frequently missed by simulation-based approaches.

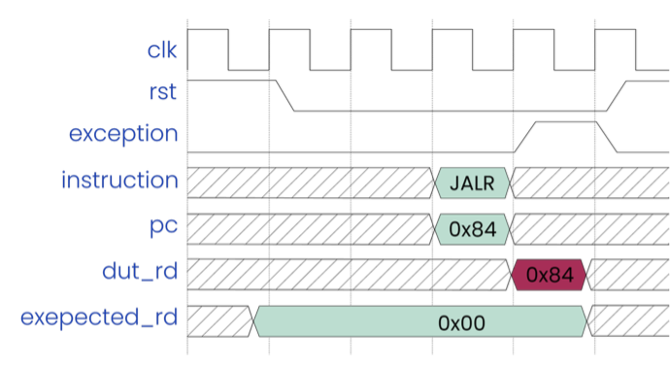

An example of a bug that was missed by simulation-based approaches is an issue in the implementation of the BLT instruction in an RV32I core, which sets the Program Counter (PC) to a target address if the condition is met. It was discovered that the design checks for a misalignment in the target address before evaluating the condition. This implementation led the design to generate an address misalignment exception where it should not because the condition was false, thus no misalignment check should take place. Due to the nature of formal verification that checks the behaviour rather than a specific case, such a bug was discovered without specifically checking this scenario. In contrast, to discover this bug in a simulation-based approach, this case should be specifically targeted with a testbench, which is not an obvious case that would be tested.

Figure 1 Example bug in BLT instruction

With the right planning, methodology, and engineering mindset, formal verification can be successfully applied to all types of digital designs—even complex processors like RISC-V cores. But to make this approach scalable, repeatable, and accessible across teams, you need more than just expertise—you need the right tooling.

At LUBIS EDA, we’re building a new class of formal-centric EDA tools that simplify and accelerate the process of applying formal verification in real-world design environments.

Formal Made Practical: The LUBIS EDA Toolchain

The software industry always seeks new tools and methodologies to produce high-quality software with minimal effort in repetitive tasks. To achieve that, any piece of code that goes into production must be tested. After implementing a new feature, a set of tests are triggered to make sure that the new features are working as intended and no bugs were introduced due to the new features.

In the software world, such tests can run using infrastructure that already exists within the famous version control frameworks like GitLab or GitHub.

In hardware development and specifically formal verification, this level of automation is still rare, such tooling is not available out of the box.

At LUBIS EDA, our mission is to bring proven software industry practice into the formal verification domain – reducing setup effort, improving usability, and accelerating bug discovery through smart automation and tooling designed for formal.

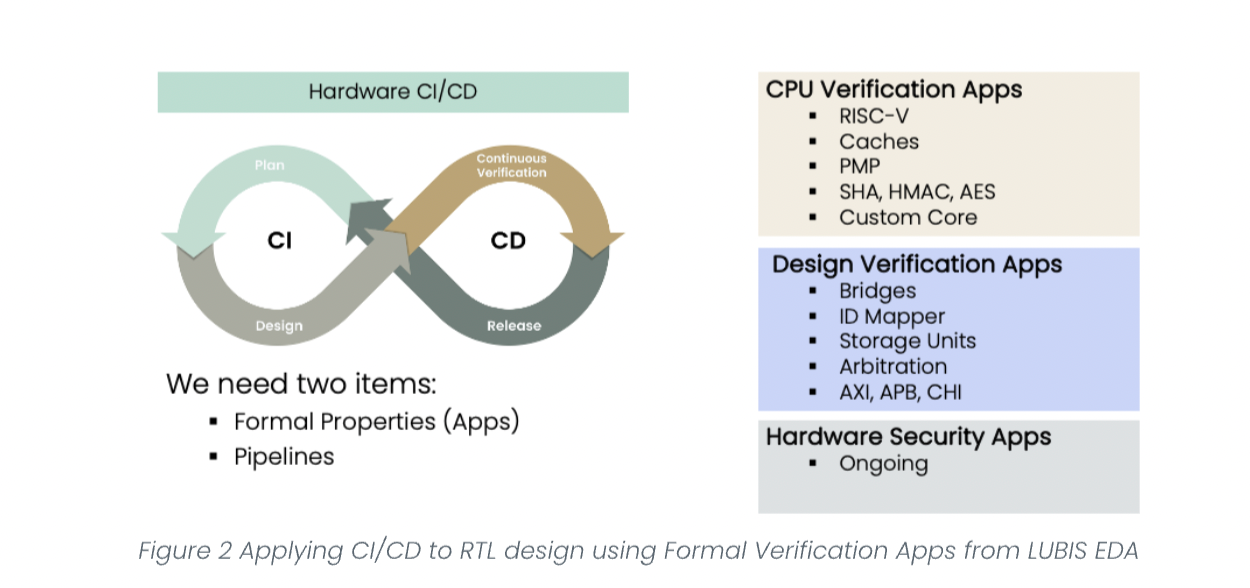

If compared to the CI/CD methodology used in software, formal properties can act as unit tests in hardware. With this, formal verification pipelines can be triggered once the design is updated, triggering a formal property suite that can catch bugs that might have been introduced.

With LUBIS EDA’s formal regression tool, all you need is your design and to choose a set of verification apps—either customized by us for your needs, written by you or picked from our growing library. After that, the tool takes care of everything else. From generating load scripts for the formal tools, to notifying the user about any failure and generating detailed and formal friendly reports about the status of the regression run.

This allows users to stay on top of their verification efforts and be able to identify a bug the moment it was introduced and act on it right away. Enabling more users to use formal verification and keep the quality of the design at its highest.

Verifying a RISC-V core with LUBIS EDA’s toolchain

A RISC-V core was used for this experiment which is based on the IBEX core developed by lowRISC. The core is 32-bit with the I-Extension. A RISC-V formal verification IP was picked from the library and connected to the design. From there, LUBIS EDA’s regression tool took over—automatically running checks with four different formal tools whenever the design changes.

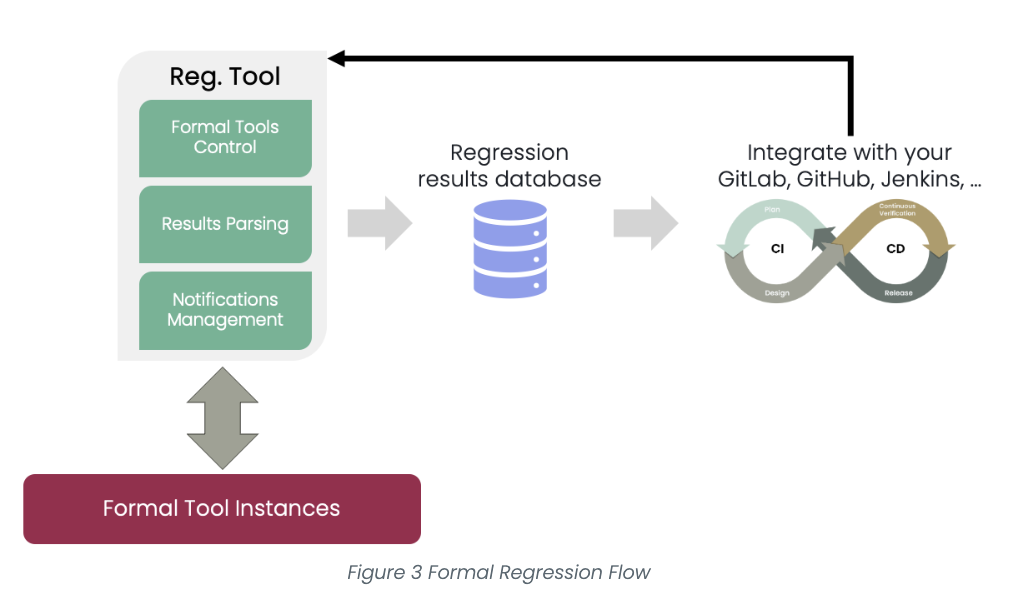

Figure 3 illustrates the formal regression flow. It shows how the formal regression tool manages formal tools, parses results, and handles notifications. This flow can be integrated into existing CI/CD pipelines, ensuring that only bug-free designs make it to production—thanks to formal verification.

Bug findings through Formal Verification in the IBEX core

Throughout several design iterations, our regression with a RISC-V verification IP was able to capture the following bugs right when they were introduced:

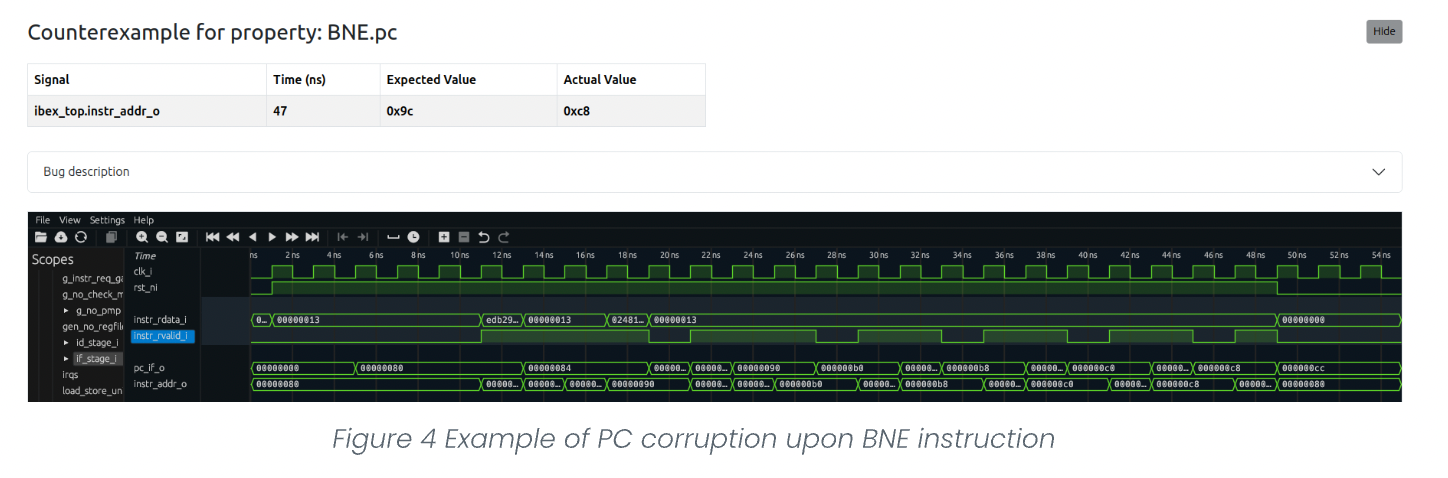

Corruption of the register file and PC upon conditional instructions

The found bug resulted in updating the register file and program counter (pc) with the wrong values upon conditional instructions such as branches, SLT, etc.

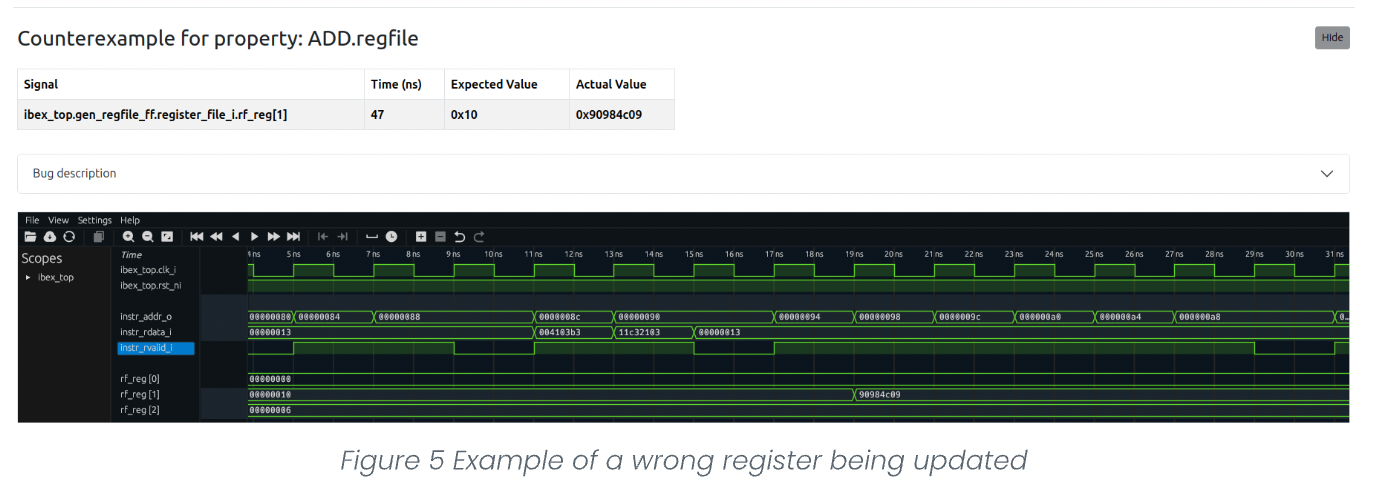

Update the value of the wrong register in the register file

Update the value of the wrong register in the register file

The found bug resulted in updating the wrong register, such that the register being updated is either (intended_reg+1) or intended_reg-1.

About LUBIS EDA

With years of experience in formal verification, standardized formal sign-off methodology and a proven track record, LUBIS EDA specializes in supporting the design of bug-free chips, ensuring timely tape-out deadlines are met, eliminating any setbacks caused by delayed bug captures. As a leading formal verification service provider worldwide, LUBIS EDA has collected the pain points of manual formal verification throughout their extensive hands-on experience. Allowing to streamline the process by introducing unique solutions that significantly enhance the efficiency of formal verification, automating most tasks involved.