This post was originally published on the MEEP (MareNostrum Exascale Emulation Platform) website.

John Davis, MEEP project coordinator, explains how the European project MEEP will develop an open-source digital laboratory for the RISC-V ecosystem in HPC, describes its hardware and software developments, and introduces Coyote, a performance modeling tool.

MEEP is a digital laboratory that lets us build hardware that does not yet exist, so that ideas can be tested on real hardware. It will be fast enough to support software development and testing at scales that software simulators cannot reach. MEEP will provide the opportunity to build a small piece of the future and demonstrate it in hardware and software at a larger scale.

Moreover, it will provide a foundation for building European chips and infrastructure, enabling rapid prototyping with a library of IPs and a standard set of interfaces, defined as the FPGA shell, to the host CPU and to the other FPGAs in the system. Open-source IPs will be available for academic use and/or for integration into functional accelerators or cores for traditional and emerging HPC applications.

Hardware development

MEEP’s goal is to capture in the FPGA the essential components of a chiplet accelerator, itself part of a self-hosted accelerator, and to replicate that instance multiple times. These components include:

- Memory: all memory components will be distributed, creating a complex memory hierarchy (from HBM and the intelligent memory controllers down to the scratchpads and L1 caches).

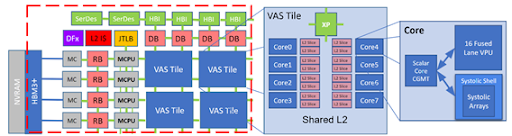

- Computation: the computational engine is a RISC-V accelerator whose main computational unit is the VAS (Vector And Systolic) Accelerator Tile.

The ACME architecture overview:

In the accelerated computational engine, each VAS Accelerator Tile is designed as a multi-core RISC-V processor capable of processing scalar, vector, and systolic array instructions. Within the VAS Tile, each core consists of a scalar core coupled with three co-processors: two are shown in the diagram above, and the third is associated with the memory controller.

Coyote

MEEP has developed Coyote, a performance modeling tool that provides an execution-driven simulation environment for multicore RISC-V systems with multi-level memory hierarchies. Coyote was designed to provide the following capabilities:

- Support for the RISC-V ISA, including both scalar and vector instructions.

- Modeling of deep and complex memory hierarchies.

- Multicore support.

- Simulation of high-throughput scenarios.

- Integration with existing tools.

- Scalability, flexibility, and extensibility, so that it can adapt to different working contexts and system characteristics.

- Ease of making architectural changes, providing a simple mechanism for comparing new architectural ideas.

Coyote combines Sparta and Spike. It focuses on modeling data movement throughout the memory hierarchy, which provides sufficient detail for:

- First-order comparisons between different design points.

- Studying the behaviour of memory accesses.

- Studying the well-known memory wall.
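As a rough illustration of what a first-order comparison between design points can look like, the sketch below uses the textbook average memory access time (AMAT) formula for a two-level cache hierarchy. This is not Coyote's model, which is execution-driven; the latencies and miss rates are hypothetical numbers chosen only to show how a growing main-memory latency (the memory wall) comes to dominate.

```python
from dataclasses import dataclass, replace


@dataclass
class DesignPoint:
    """Hypothetical cache parameters; all latencies are in cycles."""
    name: str
    l1_hit: float        # L1 hit latency
    l1_miss_rate: float  # fraction of accesses that miss in L1
    l2_hit: float        # L2 hit latency
    l2_miss_rate: float  # fraction of L1 misses that also miss in L2
    mem_latency: float   # main-memory access latency


def amat(p: DesignPoint) -> float:
    """Textbook AMAT: L1 hit time plus the expected cost of L1 misses."""
    l2_penalty = p.l2_hit + p.l2_miss_rate * p.mem_latency
    return p.l1_hit + p.l1_miss_rate * l2_penalty


if __name__ == "__main__":
    # Two hypothetical design points: a small, fast L2 vs. a larger, slower one.
    small_l2 = DesignPoint("small L2", 2, 0.10, 12, 0.40, 200)
    large_l2 = DesignPoint("large L2", 2, 0.10, 16, 0.20, 200)
    # Sweep main-memory latency to mimic a widening memory wall.
    for mem in (200, 400):
        for p in (small_l2, large_l2):
            q = replace(p, mem_latency=mem)
            print(f"mem={mem:>3} {q.name}: AMAT = {amat(q):.1f} cycles")
```

With these made-up numbers the larger L2 wins by 3.6 cycles at a 200-cycle memory latency (11.2 vs. 7.6) and by 7.6 cycles at 400 cycles (19.2 vs. 11.6): the slower the memory, the more the hierarchy design matters.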

Coyote can currently simulate architectures with private L1 instruction and data caches per core and a shared, banked L2 cache. The size and associativity of each cache can be configured; for the L2, the maximum number of in-flight misses and the hit/miss latencies can also be configured. Two well-known policies for mapping data to L2 banks, each based on different address bits, have been implemented: page-to-bank and set interleaving.
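To make these parameters concrete, the sketch below splits an address into tag, set index, and line offset for a cache of a given size, associativity, and line size, and then picks an L2 bank under the two mapping policies. The exact bits Coyote uses are not described here, so the choices are assumptions: set interleaving takes the bank from the lowest set-index bits (consecutive cache lines go to different banks), and page-to-bank takes it from the bits just above the page offset (a whole page lives in one bank). The 64-byte line, 4 KiB page, and all sizes are hypothetical.

```python
from dataclasses import dataclass


def log2_exact(n: int) -> int:
    """Return log2(n), requiring n to be a power of two."""
    if n <= 0 or n & (n - 1):
        raise ValueError(f"{n} is not a power of two")
    return n.bit_length() - 1


@dataclass(frozen=True)
class CacheGeometry:
    size_bytes: int
    associativity: int
    line_bytes: int = 64  # assumed line size

    @property
    def num_sets(self) -> int:
        return self.size_bytes // (self.associativity * self.line_bytes)

    def decompose(self, addr: int) -> tuple[int, int, int]:
        """Split an address into (tag, set index, line offset)."""
        offset_bits = log2_exact(self.line_bytes)
        set_bits = log2_exact(self.num_sets)
        offset = addr & (self.line_bytes - 1)
        set_index = (addr >> offset_bits) & (self.num_sets - 1)
        tag = addr >> (offset_bits + set_bits)
        return tag, set_index, offset


def bank_set_interleaving(addr: int, num_banks: int, line_bytes: int = 64) -> int:
    """Bank from the bits just above the line offset: lines rotate across banks."""
    log2_exact(num_banks)  # validate that num_banks is a power of two
    return (addr >> log2_exact(line_bytes)) & (num_banks - 1)


def bank_page_to_bank(addr: int, num_banks: int, page_bytes: int = 4096) -> int:
    """Bank from the bits just above the page offset: a page stays in one bank."""
    log2_exact(num_banks)  # validate that num_banks is a power of two
    return (addr >> log2_exact(page_bytes)) & (num_banks - 1)


if __name__ == "__main__":
    # Hypothetical 1 MiB, 16-way L2 split into 8 banks.
    l2 = CacheGeometry(size_bytes=1 << 20, associativity=16)
    print("L2 sets:", l2.num_sets)
    for addr in (0x0000, 0x0040, 0x0080, 0x1000):
        print(hex(addr), l2.decompose(addr),
              bank_set_interleaving(addr, 8), bank_page_to_bank(addr, 8))
```

For simplicity the sketch treats the L2 as one array when computing the set index; a real banked design would typically drop the bank bits from the per-bank set index.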

The current work has laid a solid foundation for a fast and flexible tool for HPC architecture design space exploration.

The next steps are:

- Extending Coyote's modelling capabilities to cover main memory, the NoC, the MMU, and different data management policies.

- Extending data output and visualization capabilities to enable finer-grain analysis.

MEEP extends the traditional accelerator system architecture beyond traditional CPU-GPU systems by presenting a self-hosted accelerator.

Software development

The MEEP software stack changes the way we think about accelerators and about which applications can execute efficiently on this hardware. At the Operating System (OS) level, two complementary approaches will be considered for the MEEP hardware accelerators to work efficiently with the rest of the software stack:

1) Offloading of accelerated kernels from a host device to an accelerator device, similar in spirit to how OpenCL works.

2) Native execution of a full-fledged Linux distribution on the accelerator, allowing most common software components to run natively on it and pushing the accelerator model beyond the traditional offload model of the first approach.
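To make the contrast between the two approaches concrete, the sketch below mimics the host-side flow of an OpenCL-style offload (copy inputs to device memory, launch a kernel, copy results back) using a hypothetical `Accelerator` class that simply runs on the host CPU. None of these names belong to MEEP or to the OpenCL API; they only stand in for the corresponding steps. In the native approach, the whole program, kernel included, would instead run under Linux on the accelerator itself, with no explicit host-device copies.

```python
from typing import Callable, Dict, List


class Accelerator:
    """Hypothetical stand-in for an accelerator with its own memory.

    Buffers are copied explicitly between host and "device", mirroring the
    buffer-write / kernel-launch / buffer-read sequence of an offload API.
    """

    def __init__(self) -> None:
        self._mem: Dict[str, List[float]] = {}

    def write_buffer(self, name: str, data: List[float]) -> None:
        self._mem[name] = list(data)  # host -> device copy

    def launch(self, kernel: Callable[..., List[float]], out: str,
               *args: str, **scalars: float) -> None:
        inputs = [self._mem[a] for a in args]  # operands already on the device
        self._mem[out] = kernel(*inputs, **scalars)

    def read_buffer(self, name: str) -> List[float]:
        return list(self._mem[name])  # device -> host copy


def saxpy(x: List[float], y: List[float], a: float) -> List[float]:
    """Kernel body: a * x + y, element by element."""
    return [a * xi + yi for xi, yi in zip(x, y)]


def offload_saxpy(a: float, x: List[float], y: List[float]) -> List[float]:
    """Host program: stage inputs, launch the kernel, fetch the result."""
    dev = Accelerator()
    dev.write_buffer("x", x)
    dev.write_buffer("y", y)
    dev.launch(saxpy, "out", "x", "y", a=a)
    return dev.read_buffer("out")


if __name__ == "__main__":
    print(offload_saxpy(2.0, [1.0, 2.0, 3.0], [10.0, 20.0, 30.0]))
```

The explicit copies are the cost that the native approach aims to remove, at the price of running a full OS on the accelerator.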

The software components that will be used are LLVM, OpenMP, MPI, PyCOMPSs/COMPSs, TensorFlow, and Apache Spark.

HPC Ecosystem on RISC-V

Beyond improving the RISC-V architecture and hardware ecosystem, MEEP will also strengthen the RISC-V software ecosystem with an extended toolchain and software stack, including a suite of HPC and HPDA (High Performance Data Analytics) applications. The goal is to provide an accelerator that is:


- Self-hosting: it consists of scalar processors, such as RISC-V-compatible CPUs, that execute the OS and sequential operations, along with coprocessors designed for specific workloads that exhibit a high degree of parallelism.

- Scalable: the number of resources available within the MEEP accelerator can be adapted, depending on the area requirements of the target configuration.

- Flexible: it is to be deployed on the Xilinx’s Alveo U280 Data Center Accelerator card, which combines an FPGA with up to 8 GB of integrated High Bandwidth Memory (HBM), a PCIe Gen3 x16 interface, and two QSFP28 Ethernet interfaces, each able to deliver 100 Gbps.
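As a back-of-the-envelope check on these I/O figures, the sketch below converts the link rates into bytes per second. It assumes the standard PCIe Gen3 signaling rate of 8 GT/s per lane with 128b/130b encoding and ignores packet and protocol overheads, so the results are raw upper bounds per direction rather than measured throughput.

```python
def pcie_gen3_gbytes_per_s(lanes: int) -> float:
    """Raw per-direction PCIe Gen3 bandwidth in GB/s (1 GB = 10**9 bytes)."""
    gt_per_s = 8.0          # Gen3 transfer rate per lane, in GT/s
    encoding = 128 / 130    # 128b/130b line-encoding efficiency
    gbits = gt_per_s * encoding * lanes
    return gbits / 8        # bits -> bytes


def ethernet_gbytes_per_s(ports: int, gbps_per_port: float = 100.0) -> float:
    """Aggregate per-direction line rate of several Ethernet ports, in GB/s."""
    return ports * gbps_per_port / 8


if __name__ == "__main__":
    print(f"PCIe Gen3 x16: {pcie_gen3_gbytes_per_s(16):.2f} GB/s per direction")
    print(f"2 x 100GbE:    {ethernet_gbytes_per_s(2):.2f} GB/s per direction")
```

Under these assumptions the two QSFP28 ports together (25 GB/s) offer more raw bandwidth than the PCIe Gen3 x16 host link (about 15.75 GB/s), which is one reason direct FPGA-to-FPGA links matter when scaling across several cards.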

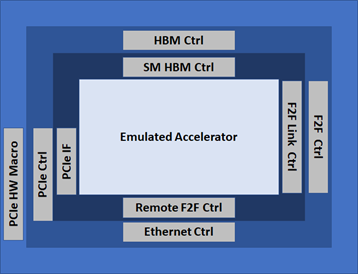

To access the hardware modules, MEEP uses an FPGA shell, as shown below:

FPGA Shell interfaces and layers for Host Communication (PCIe), Network (Ethernet), Memory (HBM), and FPGA-2-FPGA (F2F) communication.

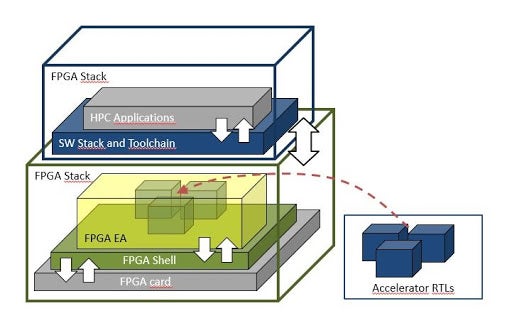

The figure below puts everything together. MEEP uses RTL to describe the architecture of the accelerator and maps the emulated accelerator (EA) onto the FPGA. The EA connects to the FPGA shell for I/O and memory connectivity. Finally, the software stack runs on top of the EA in the FPGA, enabling software development as well as system research and development.

MEEP Ecosystem layers:

John D. Davis is the director of LOCA, the Laboratory for Open Computer Architecture, and the PI for MEEP at the BSC. He has published over 30 refereed conference and journal papers in Computer Architecture (ASIC and FPGA-based domain-specific accelerators, non-volatile memories, and processor design), Distributed Systems, and Bioinformatics. He also holds over 35 issued or pending patents in the USA, along with multiple international filings. He has designed and built distributed storage systems both in research and as products, and has led the entire product strategy, roadmap, and execution for a big data and analytics company. He has worked in research at Microsoft Research, where he also co-advised four PhD students, as well as at large and small companies such as Sun Microsystems, Pure Storage, and Bigstream. John holds a B.S. in Computer Science and Engineering from the University of Washington and an M.S. and a Ph.D. in Electrical Engineering from Stanford University.